The Jetsons had Rosie, Tony Stark had J.A.R.V.I.S., and the crew of the USS Enterprise could always count on Data.

Humans have long dreamed of artificial intelligence and smart machines that can help us make better decisions, or even simply cut down on our chores. What was once a sci-fi fantasy is now a reality – Artificial Intelligence (AI) is an increasing presence in our day-to-day lives, with Siri on your iPhone, Alexa on your kitchen bench, and Lil Miquela in your Instagram feed.

But AI is built by humans, and its algorithms are sometimes guided by very human biases. The more we rely on automated decision-making, the more often we see examples of AI being sexist, racist, or exhibiting other forms of prejudice.

How did assumptions and biases find their way into machines? As groups around the world fight for social equality, is AI helping or hurting our progress? How do we hold designers accountable for their creations? And can we address these issues without overcorrecting into social engineering?

In the first What Happens Next? episode on bias in AI, Dr Susan Carland speaks to communications and media studies expert Professor Mark Andrejevic, human-computer interaction scholar Yolande Strengers, Monash University Interim Dean of Information Technology Ann Nicholson, and Microsoft Australia’s former chief digital advisor, Rita Arrigo.

“If there's stereotypes, or biases, or features in the pictures we're giving them or the speech we're using, that AI system will learn it. It can't make those ethical judgements. We who are training them up have to change the way we put the data in, and try and remove those biases so we don't produce a biased AI system.”

Professor Ann Nicholson

What Happens Next? will be back next week with part two of this series.

If you’re enjoying the show, don’t forget to subscribe on your favourite podcast app, and rate or review What Happens Next? to help listeners like yourself discover it.

Transcript

Dr Susan Carland: Welcome to another episode of What Happens Next? I'm Dr Susan Carland. On this sliding doors podcast we examine the biggest challenges confronting us today, looking at the future we face if we don't change, the future we could have if we do, and how we get the kind of future we all want. This week we will be looking at the role of bias in artificial intelligence, or AI. How do we make sure we remove human prejudice when building AI systems?

Our guests are Mark Andrejevic, Professor of Communications and Media Studies in the Faculty of Arts at Monash University; human-computer interaction scholar Yolande Strengers; Dean of IT at Monash, Professor Ann Nicholson; and former Chief Digital Advisor at Microsoft Australia, Rita Arrigo.

Mark Andrejevic: I'm Mark Andrejevic, I'm a professor in the School of Media, Film and Journalism here at Monash University.

Dr Susan Carland: Mark, welcome back. I wondered how you would define AI, or artificial intelligence.

Mark Andrejevic: Oh, it's quite a vexed question. Many of the things that we describe as AI are probably not what I would think of as AI. They tend to be automated processes that are prescripted. A machine generates a decision of some kind, but it's not really doing anything that we might think of as intelligence. It's crunching through some algorithms and some data and yielding a prescripted output, so it looks like it's generating something that involves some type of contemplation on its part, but it doesn't.

Typically, when we describe AI we're thinking about processes in which the machines engage in forms of developing responses that are not prescripted in advance, and very often those are associated with technologies that are called ‘neural nets’ where basically you feed in – let's say you want to train a system to recognise cats, a standard internet example. You supply it with a large database of images of cats, and then you have it basically go through a process of trial and error, figuring out what are the attributes that are in common in these images. And it's something where the machine, through a process that's not prescripted, starts to learn which things work and which things don't, so they call it training the algorithm.

That gets closer to what we might think of as a form of artificial intelligence. More technically, I suppose it would be called machine learning. When you get to the notion of artificial intelligence in its, I suppose, purest abstract sense, it's the ability for machines to engage in the type of cognitive activities that we associate with thinking and evaluating and making decisions. And it's still not clear whether machines have even approached that level of what we might mean as AI. The closest we can get I think is at the moment – there may be some folks who disagree with me – but the closest is this kind of machine learning process where they start to figure things out in ways that we're just not sure what they're doing, but they're able to then, in some cases with a very high degree of accuracy, complete the tasks that they're being asked to complete. But still they're being asked to complete tasks.

As thinking beings, we tend to imagine that for ourselves, we're able to set ourselves our own tasks, and so the notion of the so-called general artificial intelligence would be a machine that was autonomous enough to be able to set itself its own tasks. And that's something that's still, from my understanding, quite far off in terms of what we might mean by artificial intelligence. So we could think of localised artificial intelligence: do this task, figure out how to recognise these images, or see if you can predict the weather based on data more accurately than other forecasting systems. That's a dedicated task that machines can learn how to do given enough data and if there's enough regularity for it to detect, but to get a machine to figure out why it might want to know the weather, that's still a level of intelligence beyond what we think machines are capable of at the moment.

Dr Susan Carland: Yolande Strengers is the co-author of the book The Smart Wife: Why Siri, Alexa, and Other Smart Home Devices Need a Feminist Reboot. Yolande believes that female voices on our smartphones and networked home devices such as Google Home are recreating an old-fashioned feminine stereotype, where a little lady can be called upon to help us out.

Yolande, welcome. What do you see as the main problems with artificial intelligence?

Yolande Strengers: I think the main problem that I've been focused on is how they represent women's bodies and femininity in quite a problematic way that creates issues around sexism, around how we think about women and the kinds of roles that they're in, and how women behave and look as well. And when I say that I'm speaking about devices like digital voice assistants, like social robots, like assistants and chatbots, and now also virtual reality, and even things like sex robots. There's a huge spectrum in which women's bodies are being problematically represented, and that's really where my focus is and my concern lies.

Dr Susan Carland: You've written a book called The Smart Wife. What do you mean by that term, smart wife?

Yolande Strengers: That's right. With that term, what Jenny Kennedy and I really wanted to draw attention to was the number of devices coming into our lives that behave like, or are designed to behave like, stereotypical 1950s housewives. That might be in the way they sound, or in the way they respond to us and the service-oriented personalities they have, but it could also be in their form, and even in the types of work they're being brought in to do.

So if you think about a lot of feminised AI, it's coming in to do roles that were traditionally considered women's work, things like administrative tasks, housework, keeping everyone's to-do schedules on track. There's a kind of void being filled that has traditionally fallen to women that is being taken up by these kinds of technologies.

Dr Susan Carland: Do we see similarly stereotypical masculine or male AI, like AI that has a man's voice in areas where we might be more conditioned to think of men as authoritative or common?

Yolande Strengers: Absolutely. I think there are equally problematic stereotypes on the masculine side of things, and sci-fi is a really great place to look for some of these stereotypes, which certainly translate into the design of technologies. If you look at the masculine stereotypes in sci-fi, it's Terminator and killer robots, very problematic ideals that you definitely wouldn't want to associate with your digital voice assistant. But equally problematic are the feminine representations of women we see in sci-fi: they're often in a service role, or they may have some kind of reckoning or awakening over the course of the film, but by the end that is usually contained, or recontained, in some way.

That's a really nice basis for technology design because we want something that's familiar, that's contained, that's non-threatening, that allows us to accept these devices into our lives. So it's actually quite a clever tactic or strategy on the part of the technology designers to use femininity as a design strategy.

Dr Susan Carland: Have the designers ever commented on why all these AI assistants have female voices by default?

Yolande Strengers: Yeah. So really it's about likability.

Dr Susan Carland: So they've said that?

Yolande Strengers: Some of them have. I mean, they have different ways of explaining it, but my synthesis of what I've seen them say is that it's a way to get more people to accept these devices into their lives. Ultimately they're there to sell products, to get their products accepted, used and embedded in our lives, and femininity, or the femininity they use, which is a very non-threatening and compliant form, is a great way of getting everybody on board. It also fits our familiar stereotypes, so it isn't asking too much of the user. It's familiar terrain for us. If they went with a quirkier personality, a non-binary gender or something else, then perhaps that would appeal to a smaller number of people, or it would be a little more threatening or uncomfortable for some users.

Dr Susan Carland: What's wrong with having AI voices that are female?

Yolande Strengers: There's nothing wrong with it, per se. The issue we have with it, and an issue we talk about in our book, is that there's one version of femininity on display here, and we're now seeing it embedded in hundreds of millions of devices across different companies, platforms and technologies. If you look at the big five technology companies, they're all reproducing this particular form of femininity. We have so much fantastic diversity in the world; we wouldn't expect to walk around engaging with just one type of woman, or one type of the feminine, in our everyday lives. So why would we expect that, or why should we accept that, of our technology at large?

Dr Susan Carland: What do you see as the connecting factors between Alexa or Siri, to whom I might say, “What's the weather today?” and get an answer, all the way to the very realistic female sex robots? What do you see as joining all of this together?

Yolande Strengers: Well, there are a number of elements. Some of it is that they share similar technology: the technology going into the voice applications for Siri, Alexa and Google Home is also making its way into the voice applications of sex robots. There's also a similarity in personality, not necessarily exactly the same, but certainly the idea that these devices are there to please us and to serve us. They're not there to challenge us in any way, or to shut down or respond more assertively if they're treated disrespectfully, in a way that we wouldn't tolerate if we were speaking to another person or an actual woman. So that's another similarity.

Dr Susan Carland: What do you think would happen if all the digital assistants suddenly had a man's voice or a black man's voice? How do you think we would respond?

Yolande Strengers: I think it'd be quite challenging. I don't think this is easy terrain, and we have to be careful not to fall into very stereotypical inverse solutions, such as, “Oh, we'll just make them all men,” or, “We'll just make them all black people,” or, “We'll make them all gender neutral,” which is another really common strategy. This is complicated, because the feminisation of these devices isn't just in the voice and the name. It's also, as I said, in the types of tasks that we're handballing across to these devices, and in some ways in their physical form as well, even in the curves used to represent them. So I don't think there's an easy fix. What is needed is more of a diversification away from the norm. So not, “Here's the one solution that we should go for,” but, “Let's experiment with different forms and different personalities, and let's experiment with what else could be likeable.”

Because we don't want to just go with a stereotypically inverse solution, like, “Oh, let's make them all men,” and then have everyone turn around and go, “Oh, no, I don't like that. It makes me uncomfortable for some reason.” So that's where the experimentation is really necessary to see what else could be possible.

Dr Susan Carland: Professor Ann Nicholson is the dean of IT at Monash University. She says that the data sets we feed into AI can produce biased systems. She also sees problems with a lack of regulation. Is there any area where you have some concern about the future of AI, or even what we're doing now with AI?

Ann Nicholson: Well, one of the problems with learning from massive data sets is that these systems are learning from the past, and only from the data we give them. So if, for example, we give a system more data from men than from women, it will learn men as the norm, and that's why some health systems might not do as well at diagnosing women: they've been trained on data from men. The same goes for voice and pictures. If there are stereotypes, biases or features in the pictures we're giving them or the speech we're using, the AI system will learn them. It can't make those ethical judgements. We who are training them have to change the way we put the data in and try to remove those biases, so we don't produce a biased AI system.

Dr Susan Carland: So human biases can inadvertently lead to AI biases.

Ann Nicholson: Yep. The AI system cannot know that something's not fair or that it's based on a biased data set. It just knows what it's been given, whereas we can know that.

Dr Susan Carland: So how do we get around that?

Ann Nicholson: Well, we can look at the outputs of the system and test it against certain cases where we know what we would expect, say, a human to predict. Then we can see if there are systematic differences, drill back down to see where they're coming from, and change the data coming in. We can avoid giving it more data on men than on women. We can avoid giving it only pictures of people with white skin, and give it a representative sample of skin tones instead, and so on. So we can do a lot in how we train these systems, we can evaluate what's coming out, and we can simply not deploy them if we're not confident about what they'll do.

Dr Susan Carland: Are we, as a society, adequately conservative about how we deploy AI?

Ann Nicholson: We're not conservative at all, because we just don't have the regulatory frameworks in place to validate which AI systems get deployed. Think about what we require for, say, engineering. Engineering has been around a long time. You can't just build a bridge unless it meets specifications and is signed off, with people saying, "Yep, it's not going to fall down under certain conditions." We have nothing like that for AI systems. Some people build their AI systems really well, test them thoroughly, and are aware of the boundary cases and so on. Other people just grab the AI software, throw their data at it, and then put the resulting model in to help make decisions, and it has barely been validated and approved in the way that we might expect.

Dr Susan Carland: So there's no regulation of AI?

Ann Nicholson: Nope. You can just put an AI system up on the internet, make the URL available, and there's nothing stopping you. In certain situations, like medical decision-making, the decision-makers know they have to follow best practice, and they wouldn't be using an unvalidated system for those kinds of things. But someone could put up an AI app for self-diagnosis, and we all know we go to Dr Google. If you found an AI system that you thought was helping you diagnose yourself, there's no regulation on those.

Dr Susan Carland: So what are some of the obvious biases we see built into AI systems? Professor Mark Andrejevic.

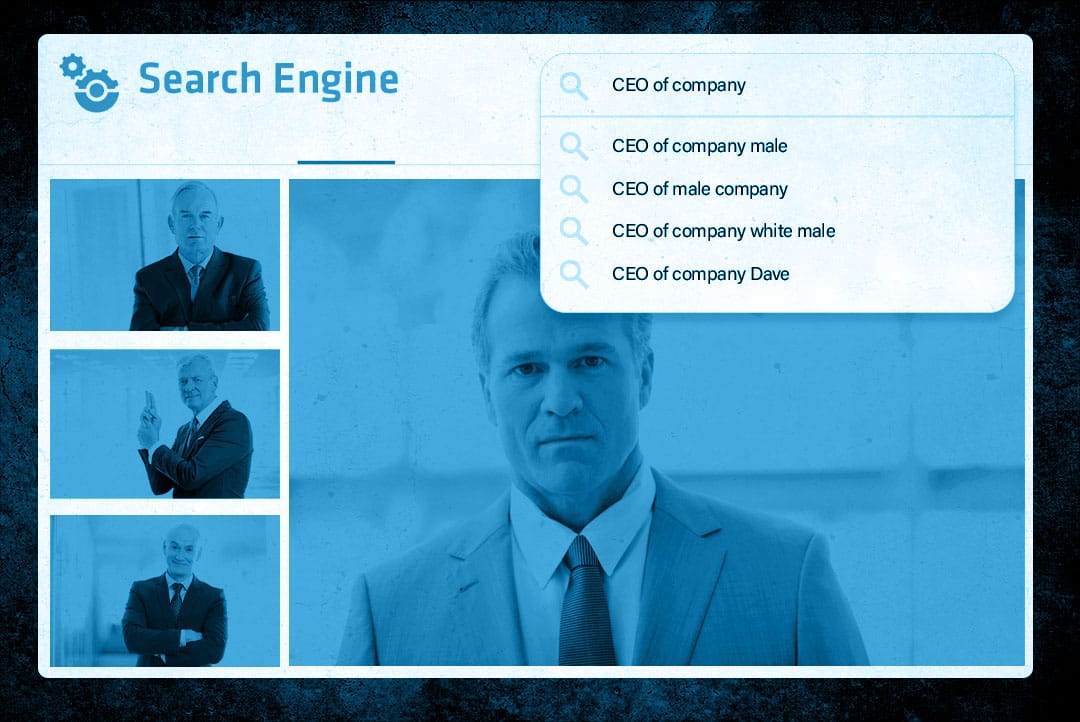

Mark Andrejevic: You encounter them every day in very common ways. One of the things I sometimes do with classes is use Google search, and you may have seen some of its auto-fill suggestions: when you're typing a search into the little search box, it makes little suggestions for you.

Well, a few years ago, some people did some experiments, typing in things like "a woman's place", and the auto-fill would be something like "is in the kitchen". And people said, "Well, this is bias, in the sense that it's sexist." You could do the same thing with ethnic categories, and you'd get very racist auto-fill responses. But Google's response was, "Yeah, it's a bias, but it's not in our system, it's in your society." And-

Dr Susan Carland: This is just because so many people have searched these words.

Mark Andrejevic: Exactly. And so they said, "All we're doing is reflecting your own biases back to you." But that raises an interesting question. What is Google's role in that instance? Is it to reproduce our own biases on a mass scale? Or is it to do something else? You could imagine Google saying, "You know what, let's counter these biases in these search terms: when you write 'a woman's place is', have it finish with 'anywhere she wants to be'," and having some type of scripted responses that challenge the ingrained biases that Google is reflecting back to us.

Now it was caught in, I think, a bit of a political jam there, because if it did that, it would be accused in some quarters of social engineering: who are you to decide what society should be? But I think the fiction of that charge is that it's social engineering anyway, right? There's no way out of it. Taking data at scale, extracting the biases from it and feeding those biases back to us is also a form of social engineering.

Dr Susan Carland: Right, because it's consolidating those biases.

Mark Andrejevic: It's consolidating and reproducing them. So either way, Google is... It's not as if they're social engineering if they do one thing and not if they do another. So what they did was punt. That's an American expression: it's when you decide not to go for the pass and just kick the ball up in the air. They made it so that if you try searching on gender and ethnic categories, auto-fill now won't work.

Dr Susan Carland: That's interesting.

Mark Andrejevic: And they just said, "Okay, we're not going to do that." But you can still find it in other categories that are maybe less obvious. And if you do things like image search, it still works this way. Type in CEO, chief executive officer, and do an image search. You will get a higher proportion of images of white men than are actually represented among CEOs. It just has that bias built in. So these are very basic, fundamental ways in which you see bias.

A researcher at Princeton University, who's African-American, took a list of names that are historically associated with African-Americans, did Google searches on them, and found that those searches were more likely to return ads suggesting that the person may have been in the criminal justice system or the prison system. She found this out after Googling her own name and receiving these ads. She thought, "Oh, I wonder why I'm getting those ads," and so she conducted this experiment.

I can give you one more example, which I think is an interesting one. Facebook was found to be in violation of anti-discrimination laws in the US when it was discovered that you could target advertising to people based on their ethnicity, gender or age. For some categories of ads, that violates civil rights laws. You're not allowed to target job or housing ads by ethnicity, for example ("I want these ads to go only to a certain ethnic group"), and you're not allowed to target job ads by age group. They were found in violation of civil rights law, had to pay a penalty for that, and claimed they had now made it impossible to target these categories of ads using protected categories of people.

But it turns out that even if you don't specify, let's say, a gender category for your ad on Facebook, their algorithms might end up introducing gender bias into how that ad is distributed, because what their algorithms automatically do is go back and look at which groups similar ads have been most successful with. Say you're running a job ad and you don't say, "I want to target this to men" or "to women". The algorithm itself may discover that similar ads, based on graphics or text, have been more successful with men or with women, and it may build in a preference that you didn't install in the first place. So the algorithm itself, based on historical activity, can start to build biases into how information is distributed.

So those are just a few everyday examples, and I could keep rattling them off. Facial recognition technology, we've heard, tends to be more accurate on lighter skin tones, at least for many of the algorithms that have been tested, and more accurate for men. So there's a kind of white male bias in terms of accuracy in many of these algorithms. They claim they're working on that. But it's a function of the data sets they work with, the people who create and test these algorithms, and who they imagine the algorithms are going to be used on.

Dr Susan Carland: So what are the consequences, if the issues of bias in AI aren't addressed or regulated properly? Here's Yolande Strengers. Do you think the way we do AI, the way we think about AI, the way we allow it to be produced in our society at the moment, do you think it's good enough?

Yolande Strengers: No.

Dr Susan Carland: Okay. So then let me take that question further. Imagine we progress down this path in the same way, which you think is unsatisfactory. What do we look like in 50 years? In a hundred years? In terms of the role AI plays in our society?

Yolande Strengers: Well, to me, that is an almost impossible question to answer, because AI changes every beat. In the time we've had this conversation, many people will have changed the algorithms on a device. As soon as you voice a critique of AI, it is changing underneath you. So I don't see any future where we can project out what we have now and expect that it will be the same in 50 years' time. I guess if we were to continue on a path of ignoring some of the issues I'm raising around gender, and this relates to racial representations as well, then I think we would be culturally and socially held back, in the sense that we wouldn't be allowing ourselves to really progress with things like gender equality and equity, because we'd be holding onto stereotypes from our past through technology and through design. And that would be pretty sad. But I don't think that's actually going to happen, because I do see the industry changing on these issues, albeit sometimes very slowly. And I think pressure from users and from the community will lead to more progressive design as we go forward.

Dr Susan Carland: So how do you see it changing for the better?

Yolande Strengers: I think there are a number of things that need to change to make things better. One that often doesn't get talked about or thought about is representations of AI in sci-fi. When you're in the industry and you're stuck in your own thought patterns, and even for myself, having worked so long with digital voice assistants now, it's quite hard to imagine alternative possibilities. Imagination is what we need, and it's not just about the voice, as I said, or about imagining a different personality for something people are going to engage with that will still be liked, but maybe not in the way we currently expect. That is what I want to see change, but it's going to take a lot of different players.

Dr Susan Carland: It's really interesting what you say about how often female or feminised AI, when you drill down, is about compliance. I will do what you want, whether that's sexually or in organising your life. And I can't help but think about Westworld. Have you seen that?

Yolande Strengers: Yes. That's another one of those inspirational films.

Dr Susan Carland: Yeah. And it wasn't until you said it that I realised that so many of the female AI characters in Westworld are there for sex. I don't remember a single male robot being used for sex.

Yolande Strengers: No. I mean, they're enslaved in a different way.

Dr Susan Carland: Yeah, the men get shot, and the women are constantly used for sex.

Yolande Strengers: Yes. The women are definitely there to serve.

Dr Susan Carland: And compliant.

Yolande Strengers: Yeah, that's right. And that's where I'm saying that sci-fi has provided the cues for the type of femininity that we now expect from many of our devices.

Dr Susan Carland: So what happens if we don't have enough regulations in place for biases in AI? Here's Mark Andrejevic. So given that you don't think we have enough protections in place for biases in AI, imagine if we continue down the path we're on. What does our society's use of AI look like in 50 years, 100 years, if we don't do anything to correct these biases?

Mark Andrejevic: We're going to move more and more to automated processing of a growing range of decisions in our lives. And in some places, those are inevitable. It's not possible to have a search engine like Google without an automated system. You can't have humans going through X billion websites and figuring out what the best outcome is. I think increasingly more and more of the decisions in our lives are going to look like that, because we're generating more data. So it's going to be like, oh yeah, university admissions, we've now created so much data about possible job candidates that it's not processable by humans, because now we're able to get all of the social media data about people. This is my dystopian scenario. We're not doing this now, dear listeners. But now we're able to get all their social media data. We're able to get really detailed data from the schools that are keeping track of every quiz they take, every test they take, their performance on all of these. All of this data now becomes too much data to process by humans.

So I think what's really driving this isn't just the decision systems, it's the data collection systems. Once you create interactive media that make it possible to collect more and more information, every decision potentially becomes an increasingly data-driven decision. And the data is too much for humans to handle, so we hand it off to automated processes. So in a way, what's driving it is this data economy we have, even more than automation, if that makes sense. The data collection is automated too, but it's the collection, more than the decision-making side, that pushes things along. Once you have too much data, you have to defer to the machines. And on our current trajectory, the systems that operate those machines are going to be commercial, non-transparent, privately owned and controlled. So that's quite a dystopian scenario: we get lost in a sea of data that private companies get to sort through in order to make decisions that shape our lives.

Dr Susan Carland: It seems that without proper regulation, we will continue to see biases built into our AI systems. Next week, we will take a look at some of the ways AI can play a positive role and perhaps even help us prevent biases in our society as we move towards the future. Thanks to all our guests. We'll catch you next week on What Happens Next?