Network congestion and underperforming broadband links have been a persistent problem for home users of internet-hosted services.

Before COVID-19 social distancing rules were introduced, most users didn’t have a “mission critical” need for a well-performing home service. Now they do, and problems that are annoying for typical home users become major impediments to working from home.

The large-scale use of teleconferencing and video-based communications tools such as Zoom, Hangouts, Skype, FaceTime and many others has exposed a multiplicity of sizing and planning problems in networks at international, national, regional and suburban levels. It has also stressed home broadband services in ways often not envisaged when they were developed and deployed.

The problem of congestion in networks has been well-researched since a series of “congestion collapse” events on varying scales occurred during the early life of the internet, when the interactions between computers connected to the network and the network itself weren’t well-understood.

All internet-based services are what are termed “packet-oriented”. Messages are sent by transmitting “packets” containing data, using a “protocol” that defines the format of the message, and the rules governing communications between devices in the network.

Data in whatever format is chopped up into small chunks, wrapped in multiple layers of protocol information, and launched into the network. Each packet then travels through the network, with the addressing information it carries used to guide it to its intended destination.
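As a rough illustration of the chopping and addressing described above, here is a minimal Python sketch. The `Packet` fields, the 1200-byte chunk size and the example addresses are assumptions made purely for illustration; real protocols carry far more header information, spread across several layers.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    """A toy packet: addressing information plus one chunk of the data."""
    src: str        # hypothetical source address
    dst: str        # hypothetical destination address
    seq: int        # position of this chunk within the original message
    total: int      # how many chunks the message was split into
    payload: bytes  # the chunk of data itself


def packetize(data: bytes, src: str, dst: str, chunk_size: int = 1200) -> list[Packet]:
    """Chop data into chunks and wrap each one with addressing information."""
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)] or [b""]
    return [Packet(src, dst, seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]


def reassemble(packets: list[Packet]) -> bytes:
    """Rebuild the message at the destination, even if packets arrived out of order."""
    ordered = sorted(packets, key=lambda p: p.seq)
    return b"".join(p.payload for p in ordered)


message = b"x" * 3000
packets = packetize(message, src="192.0.2.1", dst="198.51.100.7")
print(len(packets), "packets;", "intact" if reassemble(packets[::-1]) == message else "corrupted")
```

Each packet carries enough information to be routed and reassembled independently of the others, which is why a congested network can delay or drop some packets of a message while delivering the rest.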

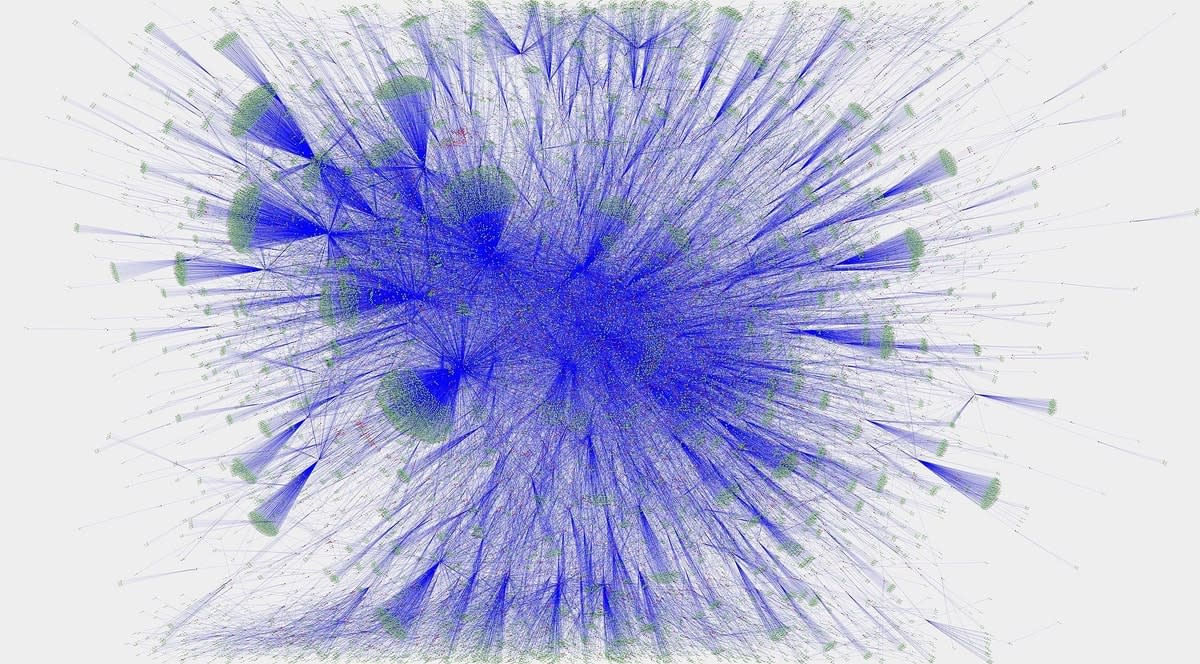

A map of the internet produced in 2016 using BGP, the protocol that allows network routers to exchange routing information. This illustrates the immense complexity of the internet, and why messages often have to hop across a large number of routers to reach their destinations.

This is a terrible over-simplification, but captures an important idea – that network traffic involves flows of huge numbers of little packets containing data, each travelling from its source to its destination, like the flows of peak-hour road traffic in a city central business district.

This analogy is intentional. Internet networks, like road networks, must provide a route for packets to travel from their source to their destination, just as cars need a route through the road network to get from one place to another. Road networks are made up of roads that interconnect intersections, allowing drivers to find a path to their destination.

Internet networks use fibre, copper or radio links to carry packet traffic, just as roads carry automotive traffic, and use devices called “routers” or “switches” to guide the flow of packets through the network, just as intersections with traffic lights allow drivers to find the intended route through the road network.

The pain of traffic congestion

We’re all familiar with peak-hour congestion in road traffic. Congestion in networks is no less painful. If the volume of packet traffic is too high, packets saturate routers and switches, and the flow of traffic through the network slows or grinds to a halt.

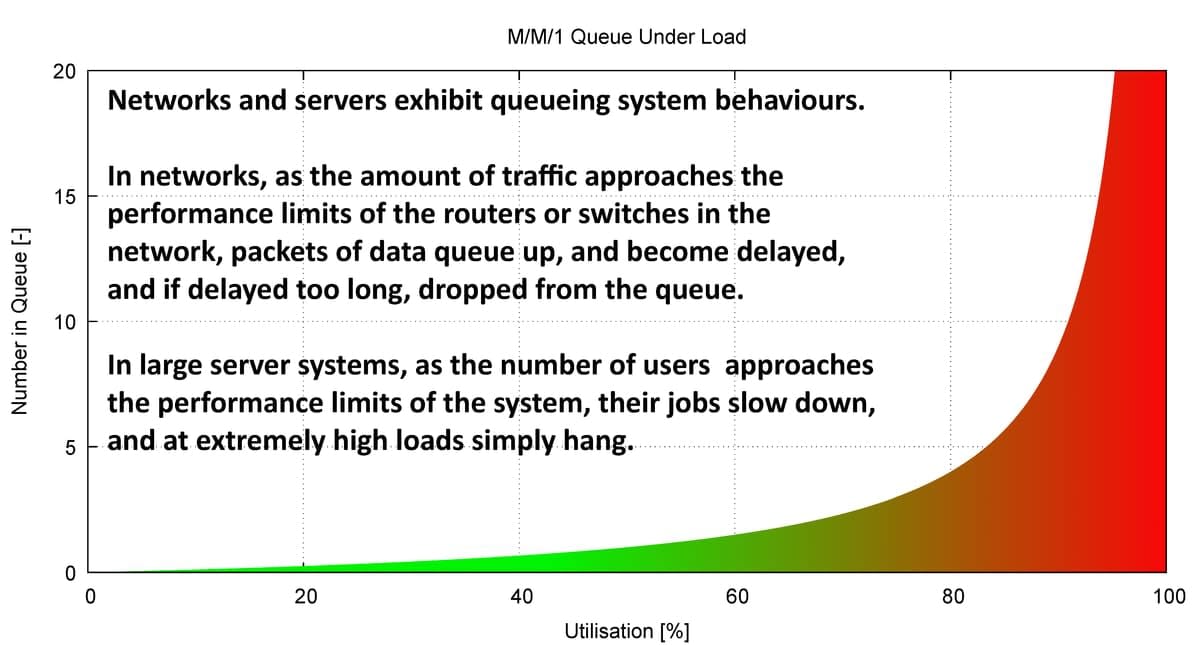

This behaviour in both instances arises because cars queue at intersections, and network packets queue in routers or switches. We can model this behaviour with mathematical queuing theory, which also explains the congestion collapse problem in either example. As either of these “queuing systems” becomes overloaded with traffic, delays increase disproportionately, and for many such systems, grow without bound as the load approaches the maximum.
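To make the disproportionate growth in delay concrete, the sketch below uses the simplest textbook queuing model, the single-server M/M/1 queue, in which the mean time a packet spends in the system is 1 / (service rate − arrival rate). Choosing this model is an assumption for illustration only; real routers and servers behave in more complicated ways. The service rate of 1000 packets per second is likewise an arbitrary example figure.

```python
def mm1_mean_delay(arrival_rate: float, service_rate: float) -> float:
    """Mean time in an M/M/1 queue (waiting plus service), in seconds.

    Rates are in packets per second. At or beyond full load the queue
    never drains, so the mean delay is reported as infinite.
    """
    if arrival_rate < 0 or service_rate <= 0:
        raise ValueError("arrival_rate must be >= 0 and service_rate must be > 0")
    if arrival_rate >= service_rate:
        return float("inf")
    return 1.0 / (service_rate - arrival_rate)


service_rate = 1000.0  # illustrative figure: packets the router can forward per second
for utilisation in (0.5, 0.8, 0.9, 0.95, 0.99, 1.0):
    delay_ms = mm1_mean_delay(utilisation * service_rate, service_rate) * 1000
    print(f"{utilisation:.0%} load: mean delay {delay_ms:.1f} ms")
```

Under these assumptions, going from 50% to 90% load multiplies the mean delay by five, and going from 90% to 99% multiplies it by a further ten, which is the pattern behind congestion collapse.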

What we’re seeing now in our international, national, regional and suburban-level networks is an immense traffic jam.

The problem is further compounded by overloading of the large server and cloud-based computer systems providing many shared services.

Performance in these computer systems is also mostly determined by the very same mathematical queuing theory, and as they become overloaded, performance can degrade – often catastrophically. The embarrassments of the recent census and MyGov congestion collapse events are case studies – if the servers and cloud systems aren’t sized for a heavy load, they’ll slow or collapse just as networks do.

The problems we’re seeing with most or all of the teleconferencing software tools can be explained by the excessive load and congestion resulting from unplanned traffic loads on networks, and computational loads on the centralised servers these tools employ. The results are data packets being delayed or even dropped, which disrupts the operation of these tools severely, or even catastrophically. Video and voice are considered “inelastic” traffic that doesn’t tolerate network delays.

Streaming video traffic is a major factor, as it’s what network planners colloquially call a “bandwidth hog”. For comparison, Zoom’s recommended bandwidth for video is about nine to 23 times greater than a high-quality VoIP phone channel (G.711). Netflix’s recommended bandwidth for standard definition (480p) is about 47 times, high definition (2K/1080p) about 78 times, and ultra high definition (4K/2160p) about 390 times greater than a voice channel. This is why Netflix is temporarily restricting its services to standard definition.
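The arithmetic behind these comparisons can be sketched as follows. G.711 carries voice at 64 kbit/s. The video bitrates in the table are assumptions chosen to be consistent with the ratios quoted above; they are not figures stated in this article, and providers' recommendations change over time.

```python
G711_KBPS = 64  # G.711 voice payload bitrate in kbit/s

# Assumed recommended bitrates in kbit/s, picked to match the ratios quoted
# in the text. Treat them as illustrative, not authoritative.
ASSUMED_KBPS = {
    "Zoom video (lower bound)": 600,
    "Zoom video (upper bound)": 1500,
    "Netflix SD (480p)": 3000,
    "Netflix HD (1080p)": 5000,
    "Netflix UHD (2160p)": 25000,
}


def voice_channel_multiple(kbps: float, voice_kbps: float = G711_KBPS) -> float:
    """How many G.711 voice channels would fit in the given bitrate."""
    return kbps / voice_kbps


for name, kbps in ASSUMED_KBPS.items():
    print(f"{name}: about {voice_channel_multiple(kbps):.0f} voice channels")
```

Put another way, under these assumptions a single ultra-high-definition stream uses about as much capacity as several hundred simultaneous phone calls.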

In many ways, the current network congestion problem fits the classic “tragedy of the commons” scenario, the network being the global and local commons.

While the congestion problems at a network level can be fixed by providers upgrading links, routers, switches, servers and cloud platforms to provide more capacity, this can take between days and months to implement, compounded by genuine supply chain delays at this time.

How can we ease the problem?

What can we do as users of teleconferencing tools to cope in the meantime? That depends on the severity of congestion, and how many network users are prepared to sacrifice the quality of their service for the common good.

The first thing we can do is use video sparingly, only for essential tasks such as screen sharing. Reducing video picture resolution helps, and avoiding the use of full HD (1080p) helps more. This won’t put the proverbial dent in the massive bandwidth demands of idle and bored home users of streaming video services, but it makes a session dropout or degradation less likely.

When the network is congested, falling back to one-to-one sessions rather than multiuser conferencing can also help. Sophisticated conferencing modes in these tools rely heavily on synchronisation and control messages between users and servers, and if these are delayed or dropped, sessions can hang or become otherwise disrupted.

Finally, measuring network congestion through the day can reveal the worst times, and the periods of lower congestion in which to schedule teleconferencing sessions.
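One simple way to do this is to log round-trip times at intervals through the day, then rank the hours by their typical delay. The sketch below assumes the measurements have already been collected as (hour, round-trip time in milliseconds) pairs, for example from a ping tool; the function name and the sample values are hypothetical.

```python
from collections import defaultdict
from statistics import median


def quietest_hours(samples: list[tuple[int, float]], top_n: int = 3) -> list[int]:
    """Rank hours of the day by median round-trip time, lowest first.

    samples holds (hour_of_day, rtt_ms) pairs. The median is used so that
    a single slow measurement does not dominate an hour's score.
    """
    by_hour: dict[int, list[float]] = defaultdict(list)
    for hour, rtt_ms in samples:
        by_hour[hour].append(rtt_ms)
    medians = {hour: median(rtts) for hour, rtts in by_hour.items()}
    # Ties are broken by the earlier hour so the result is deterministic.
    return sorted(medians, key=lambda h: (medians[h], h))[:top_n]


# Hypothetical measurements: (hour of day, round-trip time in ms)
samples = [(9, 80.0), (9, 120.0), (13, 30.0), (13, 34.0), (20, 200.0), (20, 180.0)]
print(quietest_hours(samples, top_n=2))
```

Round-trip time is only a proxy for congestion, but a few days of such measurements are usually enough to show which parts of the day to avoid.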

Creative improvisations such as these will remain the best choice in the short term, until we see major capacity upgrades by service providers.

Before joining Monash University, Dr Carlo Kopp was a computer systems and network performance engineer in the computer industry. He’s conducted research in various areas of network performance modelling since the 1990s.