Monash University’s thought-provoking podcast, What Happens Next?, kicks off its ninth season with one of the most pressing issues of the day – the impact of artificial intelligence on our perception of reality. Hosted by academic and commentator Dr Susan Carland, the season premiere brings together a multidisciplinary group of experts to better understand the quickly shifting landscape of AI and emerging technologies.

As AI rapidly evolves, our understanding of truth, human interaction and society is being challenged like never before. Today’s episode guides you through this intricate web of technological advancements and their far-reaching implications, offering enlightening – and cautionary – insights.

Reality’s blurring lines

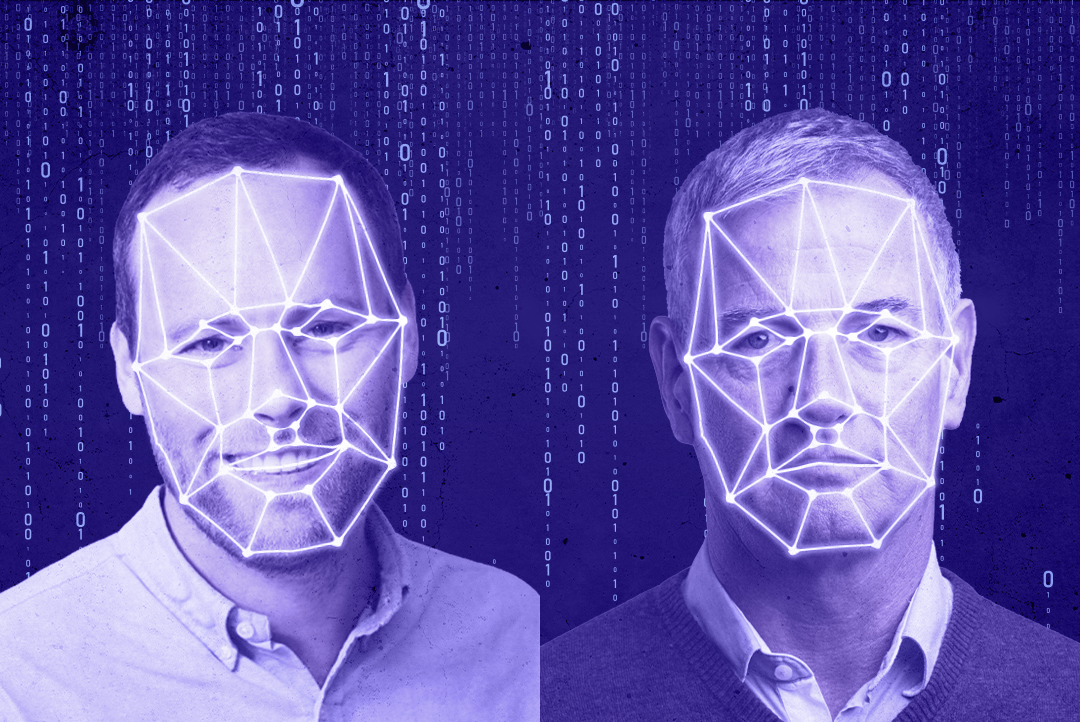

As AI-generated content becomes increasingly sophisticated, distinguishing fact from fiction is growing more challenging. Professor Geoff Webb, a world-leading expert in data science and AI in Monash’s Faculty of Information Technology, notes that AI’s current capabilities “simply didn't exist five years ago”. This rapid advancement has led to a world where even the evidence of our own eyes may not be trustworthy.

The rise of deepfake technology, in particular, poses significant challenges. Futurist Dr Ben Hamer, host of the ThinkerTank podcast, warns that deepfake, AI-generated content is “so sophisticated that it can be really quite deceiving”, potentially influencing everything from our democratic systems to our daily interactions.

“There's a statistic that says that more of Gen Z feel they can be their most authentic selves in virtual worlds than in the real world. And that's the first-ever generation to say that.”

– Futurist Dr Ben Hamer

Redefining human-AI interaction

As we grapple with these changes, we’re also rethinking our relationship with technology. Professor Joanna Batstone, Director of the Monash University Data Futures Institute, believes we're “relearning what the interaction of human and computer looks like in this next generation of artificial intelligence”.

This shift extends to robotics, too, as humanoid robots become increasingly accessible and capable. Sue Keay, Chair of Robotics Australia Group, discusses how advancements in AI are making it possible for robots to understand and respond to increasingly complex instructions, creating enormous potential for the use of these machines in our daily lives.

The psychological impact

The psychological effects of these technologies are profound. Keay says humans respond differently to humanoid robots than to other forms of technology, often viewing them as more neutral and objective. This perception can lead to a phenomenon known as “robotic nudging”, where robots can influence human behaviour – in both positive and potentially concerning ways.

“You are much more likely to take advice from a humanoid robot than you would be to take advice from your phone or your iPad, or indeed from another human,” she says.

Even more striking is the emergence of AI companions. Ben Hamer points out that these entities are already being used for everything from mental health support to spiritual advice. Not only that, younger generations often feel more comfortable interacting with AI than with humans on sensitive topics.

Listen: Are Our Machines a Little Too Human?

Navigating an ethical minefield

The nefarious use of emerging technology keeps hitting the headlines, and for good reason. In the political sphere, the potential for AI to distort reality during elections is a growing concern. Ben Wellings, an associate professor of politics and international relations in Monash’s Faculty of Arts, highlights how AI-generated content can create confusion that can be used as a tactic of political theatre.

Associate Professor Stephanie Collins, Director of Monash’s Politics, Philosophy and Economics course, adds another layer to this discussion, highlighting how social construction plays a critical role in our shared reality. She explains that while some things exist independently of human perception (like planets and rocks), other crucial elements of our society – including money, elections and political power – exist because of collective agreement.

This becomes particularly relevant when considering how AI and misinformation can shape these social constructs. As Collins notes, even if a conspiracy theory isn’t based in fact, the widespread belief in it creates a real phenomenon that carries genuine social and political impact, requiring engagement rather than dismissal.

The use of AI and humanoid robots in areas such as aged care and early childhood development raises additional questions about the balance between technological assistance and human interaction.

‘What if things go right?’

Despite the challenges, there's room for optimism. As we navigate this new landscape, critical thinking and digital literacy will be more important than ever. And the episode guests agree that, by understanding the capabilities and limitations of AI, we can harness its potential while mitigating its risks, shaping a future where humans and AI coexist in ways that enhance rather than diminish our reality.

Listen: Are Humans About to Evolve?

The conversation about AI, reality and human interaction is just beginning. As these technologies continue to evolve, our approaches to understanding and engaging with them must evolve, too.

Next week on What Happens Next?, Susan Carland and her guests will examine the many ways we can work towards a future where technology enhances the human experience rather than detracting from it. Don’t miss a moment of season nine – subscribe now on your favourite podcast app.

Already a subscriber? You can help other listeners find the show by giving What Happens Next? a rating and review.

Transcript

Joanna Batstone: So I think we're relearning what the interaction of human and computer looks like in this next generation of artificial intelligence. We are rethinking, what does human-robot interaction look like? What does human-AI system look like? And what role do humans play in that future world?

Geoff Webb: I think it's human nature to be scared of the unknown, and our ability to predict what the good and bad aspects of the new technology will be.

Sue Keay: I don't know that it's blurring reality so much as potentially making us have to focus on what it means to be human.

Susan Carland: Welcome back to What Happens Next?, the podcast that examines some of the biggest challenges facing our world and asks the experts, what will happen if we don't change, and what can we do to create a better future? I'm your host, Dr Susan Carland.

We are standing at the threshold of a future that looks radically different from anything we could have imagined just a decade ago. While innovations such as generative AI, extended reality and deepfake technologies offer exciting possibilities, they also present serious challenges. The potential for misuse is alarming, with far-reaching consequences that are difficult to fully grasp.

[Radio static]

News presenter 1: AI creations used to be garbled and incomprehensible, but now it's hard to pick the fake images from the real world.

[Radio static]

News presenter 2: Images of Australian children have been found in a data set used to train AI.

[Radio static]

News presenter 3: I think everybody needs to move faster than what we are because the technology is moving faster than anyone could reasonably expect it.

Susan Carland: Perhaps the most concerning is how these technologies are blurring the line between what's real and what's fabricated. Are we losing our ability to distinguish fact from fiction? Keep listening to find out what happens next.

[Music]

Susan Carland: Stephanie Collins is Associate Professor of Philosophy and Director of the Politics, Philosophy and Economics degree at Monash University, where she works closely with Ben Wellings, Associate Professor of Politics and International Relations.

Stephanie and Ben, welcome to the podcast. I want to start by asking you both to define reality in your respective fields. Steph, let's start with you.

Stephanie Collins: Nice, small question to start with. So philosophers would normally define reality as something that exists or is the case, independently of any one person's opinion or perception or subjective experience of whether that thing is the case. Now, that's going to get complicated when it comes to things that are socially constructed. We might get to that, but as an intuitive starting point, you can think about planets or rocks, right? Whether or not there's a black hole on the other side of the universe, that's true or false independently of what we think about it.

And I think what philosophers have always emphasised with the idea of reality is that humans have always had and always will have a really imperfect grasp of reality. So 2,500 years ago, the Chinese philosopher, Zhuangzi, has this really famous passage where he talks about waking up from a dream where he dreamt that he was a butterfly. You probably know this. And he goes, he says, “Was I a man that dreamt of being a butterfly or am I now a butterfly that dreams of being a man?” And his point is like, you can’t tell –

Susan Carland: Mind-exploding.

Stephanie Collins: Right? Mind blown! And we think, “Oh, of course, you can tell whether it's a dream or reality –”

Susan Carland: But can you? We don't know.

Stephanie Collins: Well, so lots of philosophers today think there's a really good chance that we are living in a computer simulation.

Susan Carland: Yes.

Stephanie Collins: Because if some sort of intelligent creature got to the point where it could create computer simulations that included conscious beings, they would have lots of reasons to do so, and they would probably run tonnes of these simulations for all sorts of reasons. And we could be living in one and we wouldn't know. So yeah, reality is what exists independently of us. We have really poor access to it, and that has always been an issue.

Susan Carland: That's kind of what The Matrix was talking about, right?

Stephanie Collins: Yeah, very much so.

Susan Carland: Yeah. I guess the question is does it matter?

Stephanie Collins: So I think lots of philosophers think it doesn't matter, but it matters when we start thinking along lines like the following. When we start thinking, “Oh, well, we have really imperfect access to reality. We can't tell if we're living in a simulation. Hey, so it's all a matter of perception. It's all a matter of opinion. And so I can make up my own alternative facts.”

So when we start thinking that we shouldn't be trying to gain better access to reality or we shouldn't at least be trying to reach some sort of human consensus on what's real, that's when it starts being a problem, I think.

Susan Carland: Yes. Alright, Ben, top that. Are you a man or a butterfly? First question.

Ben Wellings: I can't tell anymore.

As a political scientist, which is neither man nor butterfly, I don't think political scientists spend initially too long thinking about what reality is. There's just what's in front of them and it's the things that they like to analyse and they are quite happy going about doing that independently of anyone else, and that's all fine.

But I think that the reference to alternative facts is where political scientists might start to engage with this because there seems to have to be some sort of consensus over what matters, would be one way of putting it. And we could make a list of the things that matter. And that could be parties, it could be institutions, it could be just communication.

But at the same time, there have been various moments in history where consensus over what matters breaks down. That means there are different political realities that are not necessarily in the philosophical sense, but in the sense of agreed things that are important to politics.

And I suppose the thing that we most would think about would be, say, the insurrection in the US, which seemed to be two groups of people who understood the material world very, very differently given information that was coming to them, that they had internalised, that was responding to things they already felt and believed.

Along with a huge externality, as our colleagues in economics might say, which was the pandemic and the responses to the pandemic, which became contentious because of the different social realities that people seemed to be inhabiting, and that led to some really quite unique outcomes.

Stephanie Collins: Well, this is where social construction comes into it, I think.

So here's the issue. There are some things like planets and rocks and black holes that exist independently of what we think. And then there are some things like money and elections and Prime Ministers that exist only because we all agree that they exist. So whether a piece of paper or a coin counts as money depends upon how we all treat it, depends on our social practices. Some philosophers think whether we treat it as existing can make a difference to whether or not it actually exists.

And so it matters for things like political power in elections because we can make something exist by treating it as if it does.

And I think that sometimes what you see in say, conspiracy theories, of course, the conspiracy theory perhaps isn't real, but the fact that everyone believes it creates a real phenomenon, not the actual content of the conspiracy theory, but it creates a real phenomenon that then has causal power and has to be responded to. Can't just be written off as nothing, has to be engaged with because yeah, it has an impact on people's lives.

Susan Carland: Let's quickly define a term that's going to come up a lot in this conversation, ontology. In simple terms, ontology is the study of what exists and how we categorise different types of existence. It's about how we understand and organise our perceptions of reality.

And I feel like at the moment, we're in such a unique time where the amount of those things, social, other people, institutions, the media, whatever, that are trying to tell us what is real or what isn't or how to be in the world, we've never had more input coming at us to try to sculpt that definition of reality for us.

Stephanie Collins: There's a lot of competing ontologies at play in our politics.

Susan Carland: Yes.

Stephanie Collins: Everyone has a different list of what exists and it's a problem.

Susan Carland: Have we ever been in a time where we've had that many competing ontologies?

Ben Wellings: Yeah, I'm not sure about that. In quantitative terms, maybe not, but I think it's about the novelty rather than the number.

Susan Carland: Okay.

Ben Wellings: Let's put it that way. The thing that always springs into my head, this is not directly political, but you might remember there's a very famous photograph of the Loch Ness Monster, and you probably remember the one. And when we look at it now, it's obviously like a little toy submarine with a long neck and a head stuck on it that someone's floated and taken a photograph of. But at the time, that was quite convincing. People were taken in by that.

But I think the point that I want to make though is that the media matters here. And so I think what's new about what you're saying about the sheer volume of ontologies that are coming at us through our phones, I'm wondering if that is just a function of the novelty of this type of media.

Remember Orson Welles' War of the Worlds? And people panicked at that dramatisation of the book, and you can go back to the French Revolution and there are broadsheets with satirical cartoons that spark off rumours, that spark revolutions. I'm not 100 per cent convinced that there's something so unprecedented about this moment that we are living through just because of social media.

From the political point of view, I think that trust is really important. So there's something about trust and reality, if we trust it's real. And I think that's really perhaps what the political version of reality comes down to. And I think that all of the things that you've both been describing have weakened that sense of trust.

There used to be synchronicity, didn't there? Everyone watched... Not everyone, but a lot of people would watch the same thing at the same time and they'd get the similar sorts of information. I think that's where the consensus over what matters that I was describing at the outset was, and then maybe the distinction here is the narrowcasting rather than the broadcasting means that –

Susan Carland: Yeah. That's what I was going to say.

Ben Wellings: That we've got lots of different ontologies coming at us.

Susan Carland: That's exactly right. That, coupled with the inundation.

I think if we think about the Loch Ness Monster flipper, you might see that and everyone shares that and turns up in the newspaper, everyone's looking at the same newspaper, whereas the phone in our pockets, we are being narrowcast to.

What I see on my feed and what comes up in the weird WhatsApp groups I'm in is probably very different to yours and what is considered truth, and, “Have you seen this video about what's happening here and there?” And you'd be like, “I've never seen that. I've never heard of it.” It's being inundated and it's fractured now.

Stephanie Collins: I think it's a bigger scale now for sure, but to some extent, humans have always had to decide who to trust, who our sources are. And for me, that's the question. The question is who should we trust? Who are the reliable testifiers? Who are the reliable sources in the environment today? I think it's really hard for any of us to assess that.

[Music]

Ben Hamer: Hi, my name is Dr Ben Hamer. I am a futurist and the host of the ThinkerTank podcast.

Susan Carland: Ben, thank you so much for joining us today. I want to start by asking you if you think we will get even worse, or we will struggle even more to distinguish reality from fake, as AI gets better and better into the next decade?

Ben Hamer: I think where we're finding ourselves at the moment is in what we call the post-truth era, and that's a time where we care more about what feels true to us rather than what the actual facts are.

We've seen this with conspiracy theories, with conversations and debate about vaccinations. Donald Trump coming out, slamming everything as fake news, where we don't necessarily go to what science or what evidence-based research suggests, but rather, if it feels true to us, therefore we take it as truth.

And so that in and of itself is a really interesting look at this whole misinformation landscape that we find ourselves in.

You then throw technology in on top of it, and it becomes even more confusing, particularly with the rise of AI-generated content with technologies like deepfakes.

So we saw, I think the most famous one was the Pope with that really oversized puffer jacket. We've seen the Met Gala earlier this year where images of Katy Perry and Lady Gaga were circulating, and people didn't really stop to question whether or not it was fact or fiction because it was harmless, it wasn't malicious.

But naturally, we can expect more harmful consequences to come from that. And yeah, I think it will be increasingly more difficult into the future to determine between what's real and what's fake.

Susan Carland: And are there specific technologies that are emerging that seem to do a better job of tricking us or fooling us or creating impressive fakes than others?

Ben Hamer: Yeah, I think it is deepfake technology more than anything else. You do have augmented reality and virtual reality and others, which can blur the lines between physical and digital worlds. But really, it's deepfake, AI-generated content, which is so sophisticated that it can be really quite deceiving.

In the past, we've seen a deepfake of Barack Obama. So taking a former US president and people weren't able to determine whether that video was real or not. And you think about the potential application of that, whether it's around elections and encouraging voters to vote a particular way because we know that celebrities and influential people have the absolute ability to do that, particularly in US politics. It is going to be quite profound and not necessarily in all the good or right ways, but it will be deepfake technology that will be the most convincing for sure.

When you're presented with something, you look at all the different indicators as to whether or not it could be authentic. And if it's just text-based, well, then there are so many questions that you can still ask as to whether or not who was behind writing that and its authenticity. But when you have someone's face to it and their likeness, when you have not only their voice but their style, their tone, their intonation, all of that comes into play.

So even if they, every now and then like to crack a joke, then the deepfake is able to do that as well. It really does become quite difficult to discern whether or not it is the real person or a deepfake mimicking their likeness.

Susan Carland: Professor Geoff Webb is a world-leading expert in data science and artificial intelligence at Monash University. It feels like AI is changing really fast at the moment. Can you give a top line overview for the average punter at home on how you've seen generative AI change in the past, say five years?

Geoff Webb: Oh, well, it's extremely simple. The current capability simply didn't exist five years ago. In fact, if you'd asked me five years ago, “Would an AI system be able to hold a conversation with you on an arbitrary topic in a convincing way?” I would've said no. And the systems have just suddenly taken off, and it's a result of the massive scale of the systems that they're now able to produce. And with that scale comes extraordinary capabilities.

Susan Carland: How do you see AI-generated content affecting our perception of reality?

Geoff Webb: This is a really interesting one. It's now possible to produce images that are so convincing so easily that everyone I think needs to be very careful about even believing the evidence of their own eyes.

Susan Carland: How do we live in a society like that though when we can't trust for certain that the things we're seeing are true, when there is no shared reality?

Geoff Webb: So I think those are two slightly different things. So the first one is we've never really been able to trust images because images, A, have for a very long time been tampered with. It's only not been possible for the average punter to tamper with images in the same way. So that's one thing, but also, just the way a photographer frames a picture and exactly what they choose to take a picture of also frames the story. So it's really not a new thing. It's just on a bigger scale.

Susan Carland: It is on a bigger scale, and it's not new, but it's one thing to see a photo that a journalist has taken and go, “Well, you left out the very important aid truck that was next to that starving person,” for example. It's another when an entirely fake video of the leader of a country can be created saying something that could start a civil war. How do we as society, how do we catch up? How do we deal with this?

Geoff Webb: I actually don't know the answer to that, but I'm again not convinced that it's totally a problem with the technology. I think that people are already willing to believe that a leader of a country has said something without needing to see the video, and the opposition just needs to say they've said it, and people will all too willingly go down that path. So I think social media and the ability to broadcast messages widely is a large part of this. It's not just the AI systems' ability to generate convincing images.

Susan Carland: How good are we at detecting AI, do you think, as humans?

Geoff Webb: Not very good at all. I think we're very easily misled.

Susan Carland: And do you think that will only increase how bad we are at it, as AI gets better?

Geoff Webb: One of the things about the AI systems is that they're able to learn from observation. So they're able to monitor the extent to which they're detected and learn what it is about what they do, which is detectable and learn not to do it.

Susan Carland: And when we engage with Claude or ChatGPT and say, “Can you write, summarise this document I've written?” And it gives you a suggestion. You write back and go, “No, I want it more like this.” That is us teaching it. We are helping it to get better at its job.

Geoff Webb: Absolutely. Absolutely.

[Music]

Susan Carland: Professor Joanna Batstone, Director of the Monash University Data Futures Institute, says that the huge strides in AI are making us reconsider our relationship with technology.

Do you think that AI is changing the way we perceive reality or how we understand reality?

Joanna Batstone: It's a fascinating question because we've been using AI tools around us for years now, and it's almost commonplace to think about using an AI-assisted tool. But with this massive explosion of interest in AI over the last 18 months, as we think about fake news, videos, photographs, images, text being generated by generative AI, it's really causing us to think through, “Can I trust this content? Is this content created by AI? How do I feel about whether this content is created by AI or not?”

And so I think it's changing that dynamic of us thinking about our perceptions of reality, but it's also causing us to reflect on do we like it or do we not like it? And are there use cases where it's perfectly okay to have AI-generated content and other use cases where we're much less comfortable? And that comfort zone is often very much in the area of perspectives of privacy or human rights, and also respect for the individual.

So I think it's changing the way we think about reality, but we also have become very comfortable with using AI tools in our everyday work and lives.

Susan Carland: That's such a good point. And I wonder, like you said, we have been using AI for a long time and not really thinking about it. Spell check, for example, is one perfect example that we all happily use whenever we use the computer or on our phones when you're sending a text, we're often very glad that spell check intervenes or sometimes not.

And now we've tipped into something else where people are like, “Hang on a minute. I don't think I feel okay about this. I don't know, is this real? I feel like this has crossed a line.” What do you think or why do you think we're at this moment now where there is a discomfort that wasn't there before? What's triggered that?

Joanna Batstone: I think the discomfort that's been triggered is this realisation that AI is so good right now, that the technology has reached a tipping point. The compute capabilities of modern-day computers, the data sets that we have access to, the algorithms, AI really does feel to many people incredible that it can do so much. And so that tipping point is causing us to then reflect on what will the future look like? Will AI ever take over and become this general artificial intelligence? What role do humans play in that conversation around AI?

So I think we're relearning what the interaction of human and computer looks like in this next generation of artificial intelligence. We are rethinking what does human and robot interaction look like? What does human-AI system look like and what role do humans play in that future world?

[Music]

Susan Carland: The rise of AI has us reconsidering the rise of robots, too.

Sue Keay: My name is Sue Keay, and I am the Chair of Robotics Australia Group, and also a Director of Future Work Group, and really passionate about the difference that robotics technology is likely to have on workplaces and also on society.

Susan Carland: Sue, welcome to the podcast.

Sue Keay: Yeah, thank you very much for having me.

Susan Carland: Can you start by telling us what are humanoid robotics?

Sue Keay: Oh, I'm glad you asked. Humanoid robots, it's really just a term to describe the form factor of particular robots. So they're robots that walk on two legs, have two arms, have a head, and look in many respects in the same form as a human, although in most cases, they're very recognisably not human. Up until recently, humanoid robots have seemed a little bit out of reach.

So just to give you an example, I think we probably only have one humanoid robot in Australia at all. They tend to be very expensive, often in the range of millions of dollars. But what we've seen happen is a lot of reductions in the price of many of the components that go into building robots.

And we've also seen, as many people would be aware, a huge growth in the opportunities for applying artificial intelligence, which makes robots much easier to use than they have been in the past.

And given that combination of factors, we are now seeing many companies in the US investing significant amounts of money in humanoid robots. And so I think this huge investment that we're now seeing in humanoid robots will be really interesting in terms of how it is able to make those robots much more cheap, much more accessible to everybody so that there's not just one in Australia, maybe we'll see many.

Susan Carland: Is there anything that the humanoid robots are able to do well at the moment, either in homes or in factories or workplaces?

Sue Keay: There are experiments of them folding washing. I'm not sure I trust them with my washing at the moment, but just actually being able to pick things up and put them down without you worrying that they're going to break glass, that's actually a very complex problem for a robot to solve.

But they can now, thanks to large language models that power things like ChatGPT, we are now at the point where you can give verbal instructions to a robot and have some hope that they'll be able to understand what you're saying. So you don't have to be able to program to be able to communicate with your robot. That's another big step forward.

There are some pretty cool videos on the internet at the moment, showing a robot. Someone says that they're hungry, so the robot picks up an apple from a fruit bowl and passes that to their human.

Susan Carland: That's really interesting because that's quite high-level thinking. It's not saying, “Pass me the apple.” It's just giving it information that it then needs to deduce something from and then put together an action for that.

Sue Keay: That's exactly right, and that has been a challenge up until very recently that artificial intelligence was not able to solve, but that we're getting much closer to being able to do. So very open-ended questions like, “Bring me a spoon,” have been extremely complex for robots to try and reason their way out of because you have to be able to know, one, what a spoon is. That's fine, but where in a house would someone keep a spoon?

But thanks to a lot of these advances in AI, the training up of these models is now making it much easier for robots to at least pretend they understand what's going on in the world. Whether or not they have true understanding is another matter.

Susan Carland: Which to be fair sounds just like me, so they're actually becoming more human by the minute.

[Laughter, music]

Susan Carland: Do you think our interaction with these humanoid robots will change or impact the way we think about what is real and reality?

Sue Keay: Oh, 100 per cent, because we haven't really got into cybernetics at all. But one of the other areas that is developing very rapidly is around implants. So essentially, we're bringing machines into our bodies, which makes us cybernetic organisms. But then you end up having to ask the question of where do you draw the line between what is a human and what is a machine when we're starting to incorporate machines into our bodies?

So I think this whole journey that we're going on in terms of what robots mean in our lives, really, I don't know that it's blurring reality so much as potentially making us have to focus on what it means to be human.

Susan Carland: What do we know about the psychological impacts of humans interacting with humanoid robots? How do we feel about it?

Sue Keay: Robots are what we call embodied technology, which means they often have a shape that resembles a human. So, unlike any other type of technology, we respond to them psychologically in a very different way to how we would respond to a phone or an iPad.

And so for example, you are much more likely to take advice from a humanoid robot than you would be to take advice from your phone or your iPad, or indeed from another human.

Susan Carland: Ha!

Sue Keay: Because these humanoid robots, and perhaps this is also because of popular culture, are seen as being very neutral and objective. So if you think of Data on Star Trek, you ask him a question and he'll give you the response that you expect in a very scientific, genuine way. But unfortunately, people don't appreciate that robots are only as objective as they are programmed to be.

But what it does lead to is a phenomenon that we call robotic nudging, where you can use a robot to give humans cues that direct their behaviour. And this can be used for good things. So you could use a robot to make suggestions that might help someone make healthier lifestyle choices, for example. But it could also just be used to sell you stuff, right?

Susan Carland: You are obviously a much more positive person about human nature than me. My first thought was that it could encourage people to commit crime or do something far more nefarious.

Sue Keay: I guess it depends on who's programming the robot.

[Music]

Susan Carland: Here's futurist Ben Hamer.

What is an AI companion? Is that the annoying pop-up that tries to help me when I talk to my mobile phone provider or my gas company, and just infuriates me? Or is it something different?

Ben Hamer: Yeah, I love how they give it a name so that you don't get infuriated.

Susan Carland: Yeah!

[Laughter]

Ben Hamer: They're like, “You're talking to Jessica,” and it's like, “Jessica's still an annoying chatbot.” Yes. So that's a chatbot.

Susan Carland: Okay.

Ben Hamer: So chatbots are very transactional. They tend to be around to help you solve a specific issue or an inquiry that you might have, whereas AI companions are a little bit different. So they're intended to develop an ongoing relationship with you. They're essentially there to get to know you.

And when it comes to how they're being used, AI companions are there to simulate human companionship, and they're being used in mental health, for example.

We have a massive mental health crisis. We have a shortage of practitioners and clinicians. A lot of what people need is what we would call very low-level mental health support. And sometimes it's just someone who needs to be there as an active listener, and AI companions are able to play that role.

We're seeing them being used in the form of personalised tutors in education. We're seeing them used as religious and spiritual advisors. We're seeing them used in relationships.

You're not necessarily going to see a church pushing an AI spiritual advisor onto you, or a psychological practice pushing an AI mental health companion onto you. It's more for individuals who might not feel comfortable going into a church, or who might not feel comfortable picking up the phone and making an appointment or having to talk face-to-face with a human.

Because what we do see, and this is one of the benefits of this kind of technology, is that younger generations in particular feel much more comfortable, much more authentic, either in virtual worlds or engaging with technology, than talking about some of these really curly and sensitive topics with another human.

Susan Carland: That's astounding when you think about it, how this could be changing the way we do human interaction, and even what it means to be human, if we would actually prefer to speak to AI about these things rather than to another person. What do you see as being the ramifications of that for social interaction?

Ben Hamer: There's a statistic that says that more of Gen Z feel they can be their most authentic selves in virtual worlds than in the real world, and that's the first ever generation to say that. So they feel they can be more authentic as an avatar in the metaverse than as a human in the physical and real world as we know it.

And a lot of people from older generations, predominantly Gen X and baby boomers, who hear that are astounded, they're disappointed. Some get incredibly frustrated by it because they go, “Well, they're diminishing their social capabilities. They don't know what they're missing out on. They're just going to be lonely and isolated.”

But conversely, those younger generations would say that they've never felt more seen and heard, because they're able to connect with their community and find their tribe and find their people in a way that they probably never could, or still can't, in the physical or real world.

And so it really just comes down to the way in which you approach the question and the issue. Do I think that it will impact social interaction and those social skills? Yes. But will those social skills be the same in 10 years' time as they are today, or will they be different because of virtual and digital environments? I think they'll be different as well.

Susan Carland: Here's technologist Joanna Batstone.

How do you see this role of human-like or human-esque AI evolving, and how do you think we are grappling with that?

Joanna Batstone: There are two areas that I see as very active areas of research at the moment. One is around robotics and human-robot interactions as they relate to aged care facilities. Can we enable robots to assist in an aged care facility? And what does that style of human-robot interaction look like?

And another big area of research is with the younger generation: early childhood development. There's a proliferation of AI-based toys today, with increasing numbers of AI toys on the market that aim to give the child personalised learning experiences or personalised education.

And again, in both of those scenarios, the elderly and the youth of today, how do we ensure that we've still got enough human interaction and social engagement, even if there's assistance from an AI-enabled companion or an AI-enabled robot? It may not just be a physical machine; it may also be a software companion. How do we ensure we've got that balance between human and machine, and those human emotions of empathy and sympathy and nurturing? How do we ensure that we've got that balance right in those different populations?

Susan Carland: It's funny. As you talk about them, I feel this instant knee-jerk reaction of like, “Oh, I don't like this.” I don't like the thought of elderly people in a care home, having a robot come and give them their medicine and their food. Something about it makes me go, “Oh, this feels like a terrible development.”

But I guess I also want to be conscious of the knee-jerk reaction. Is that reasonable? Is that fair? Could this actually be a great assistance? Why am I having that knee-jerk reaction?

Joanna Batstone: Yes, it is a knee-jerk reaction that many people will have, and it's a very complicated and nuanced conversation. Ethically, the conversations we have are around, as you just said, is it appropriate to remove that personal interaction and replace it with a machine?

Susan Carland: And it's hard because we can't foresee what the consequences of this will be. The very great outcomes of the printing press, for example, are clear to see, and I'm sure there were some negative ones as well, but in this moment, we cannot see the impact of bringing helpful robots into aged care facilities, for example, or other places.

So it's a tricky one to know, are we on the precipice of something very great and useful or something that we'll look back and go, “What did we do? Why did we think this would be a good idea?”

Joanna Batstone: Yes. Technological change has always prompted these questions. Think of the Industrial Revolution, or even the post-war reinvention of the kitchen with all of those electrical appliances. Every time a new technology is introduced, it causes us to reflect on why things are changing, and whether we like the changes it's causing us to adopt.

Susan Carland: Here's Professor Geoff Webb.

Geoff Webb: I think that it's a common story with all technological steps forward. When cars first began to emerge, people were terribly worried about them, and their worries were that they were going to throw up dust and make the countryside all dusty, and that they were going to scare the horses, neither of which turned out to be the thing we should have been worried about. There were lots of other terrible effects of the technology, such as widespread suburban sprawl, with people having poor access to facilities, and global warming.

So I think it's human nature to be scared of the unknown, and our ability to predict what the good and bad aspects of a new technology will be is actually very poor.

Susan Carland: Which is actually scary when you say it, because it sounds like you're saying, “Oh, you think the reality stuff is bad? That's not going to be the worst of it at all. Wait till you see what the real problem is.” That's frightening.

Geoff Webb: Well, yes and no. Also, we don't know what the amazing benefits are going to be. So cars have also had amazing benefits as well as their amazing harms. Think of ambulances.

Kamala Harris: In the greatest nation on earth, I accept your nomination to be President of the United States of America.

Donald Trump: And quite simply put, we will very quickly make America great again. Thank you very much.

Susan Carland: With major elections happening globally, we are seeing how emerging technologies can distort political reality. This tech is increasingly accessible, often trusted without question, and unfortunately open to misuse. The intersection of AI and politics is particularly fraught. Let's hear more from Ben Wellings on this.

How are you seeing AI interfere with our understanding of reality, again, when it comes to elections?

Ben Wellings: Here's a real-life (no paradox intended) example from the UK election. There was a candidate called Steve AI who ran in the constituency of Brighton Pavilion. This was an AI person who'd been put on the electoral roll. The AI person's creator lived in Blackburn, I think, up in the north of England, about 300 miles away, and was quite open about that, saying, “I don't live in the constituency, but Steve AI is there for you.”

Susan Carland: “Steve does.”

Ben Wellings: Yeah. And Steve apparently had certain advantages. He would always answer your emails. He was always available for comment and so on, but we can quantify public trust in this. He came dead last in that seat, even behind the Monster Raving Loony Party. So the people of Brighton Pavilion obviously didn't have trust in Steve AI, or in AI more generally, we might say.

But the other point is that we don't know where interference has come about. I think it has potential to create that kind of sense of confusion that is tactically very advantageous in politics, and we might elevate that to grand strategy as well, if we think about international relations, too. And we know that certain state actors use disinformation, and then the misinformation comes about because people do follow the cues of their favourite politicians. I guess that's why we like them because they've done some of that thinking for us.

And so when it comes to an actual social situation in which we find ourselves, we've got the cues from other people who we trust and have done thinking about this to give us arguments, to give us ways of interpreting whatever it is that we are seeing.

Susan Carland: Steve AI is only the forerunner in a new world where AI and other emerging technologies affect everything we do, but Ben Hamer says that's not a bad thing.

Ben Hamer: There's a lot of bad stuff going on in the world right now, and it's really easy for us to add to these fear narratives around the robots coming to take our jobs and a whole lot of other stuff. I'm very much a big believer that instead of asking, “What if things go wrong?”, we should ask, “What if things go right?” And I think when it comes to technology, there's a real opportunity there.

Susan Carland: Next week, we'll ask, what if things go right?

Thank you to all our guests on today's episode. You can learn more about their work by visiting our show notes. We'll also link to our previous related episodes about the future of cybernetics and bias in AI. Have a listen.

If you are enjoying What Happens Next?, don't forget to give us a five-star rating on Apple Podcasts or Spotify and share the show with your friends. Thanks for joining us. See you next week.