As parents, our foremost concern has always been the safety of our children – especially in an age dominated by digital technologies, social media, and artificial intelligence.

For years, we have grappled with pressing online dangers such as cyberbullying, pornography addiction, harassment, and scams. Yet, despite ongoing discussions, many of these issues remain unresolved. Now, a new and deeply unsettling threat has emerged – deepfake technology.

While the term “deepfake” may sound recent, the technology itself emerged in the mid-2010s when AI researchers and hobbyists adapted generative adversarial networks (GANs) and other machine-learning techniques to create realistic face-swapped videos.

It wasn’t until 2017, however, that deepfake content truly gained traction, with Reddit users beginning to generate AI-powered synthetic celebrity pornography.

Today, the creation of deepfake videos is disturbingly accessible, thanks to user-friendly software such as Face Swap, DeepFaceLab, FaceApp, and Wombo.

Even mainstream platforms such as Snapchat and TikTok have integrated deepfake technology into their features, making AI-generated alterations more prevalent than ever.

The proliferation of explicit deepfake content has reached alarming levels, with reports showing a 550% increase in deepfake videos online between 2019 and 2023.

Paedophiles are using AI to create and sell life-like child sexual abuse images, including of the rape of babies and toddlers, using AI software Stable Diffusion. https://t.co/dwHa6ea9KD

— Shayan Sardarizadeh (@Shayan86) June 28, 2023

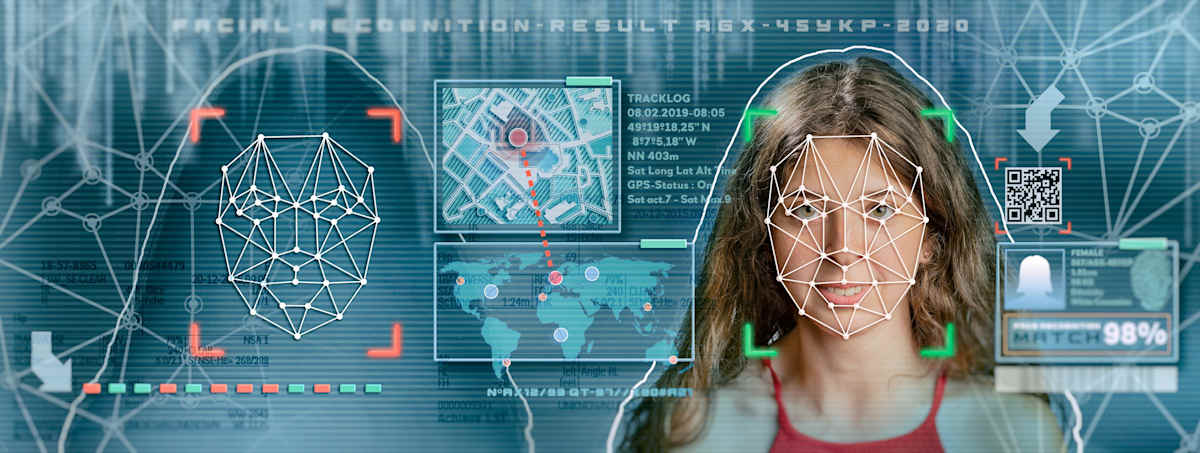

The rapid advancement of generative AI tools, from GANs to diffusion models such as Stable Diffusion, has transformed the landscape of online child exploitation.

Highly realistic yet entirely synthetic child sexual abuse material (CSAM) can now be created without direct victim involvement, making detection significantly harder and normalising exploitative content in dangerous ways.

With AI-powered video generators such as OpenAI’s Sora and text-to-image models such as Stable Diffusion XL capable of producing hyper-realistic media, the potential for misuse is growing.

Offenders no longer need access to real victims – they can generate and modify explicit content at scale, making it easier to produce highly convincing synthetic CSAM that evades traditional detection tools.

Even more disturbing, pornographic material makes up 98% of all deepfake videos online, and an overwhelming 99% of those targeted are women and girls.

This stark reality not only highlights the alarming rise of deepfake-fuelled image-based abuse, but also underscores its deeply gendered nature and the devastating toll it takes on its victims.

As this technology advances, so do the risks – particularly for young, unsuspecting users. The pressing question remains: How do we protect our children from this growing digital menace?

How AI is fuelling a new wave of child exploitation

A particularly alarming trend is the rise of “nudification” apps that use AI to digitally strip clothing from images. Some of the most infamous examples include:

- DeepNude – an AI-powered app that was shut down in 2019, but inspired a wave of similar tools.

- DeepNudeCC – a successor to DeepNude that continued circulating on underground forums.

- DeepFake Telegram Bots – a network of AI-powered bots on Telegram exposed in a 2020 report by security researchers at Sensity AI for generating explicit fake images of women.

While many of these apps claim to be for “fun” or “entertainment”, reports have shown they are frequently misused to target minors.

The Sensity AI investigation found that this Telegram ecosystem, in which bots “strip” clothing from uploaded photos using an evolution of the infamous DeepNude, had been used to create more than 100,000 non-consensual images, many depicting underage individuals.

Additionally, the National Center for Missing and Exploited Children (NCMEC) reported that in 2023, its CyberTipline received 4,700 reports related to CSAM or sexually exploitative content involving generative AI technology. These figures highlight the escalating misuse of AI in child exploitation.

Many of these altered images originate from innocent social media photos posted on platforms such as Instagram and Facebook, where minors frequently share personal images.

AI-powered nudification apps can digitally remove clothing from these photos, transforming them into explicit deepfake content. These images are then disseminated across dark web forums, encrypted chat groups, and social media platforms, making detection and removal exceedingly difficult.

Beyond manipulated images, offenders are now using AI to create entirely fictional but hyper-realistic child avatars that are indistinguishable from real children. With deepfake video technology, AI-generated abuse videos could soon become a major challenge for law enforcement, complicating efforts to classify and prosecute CSAM.

This raises urgent questions:

- Should AI-generated CSAM be prosecuted the same way as real CSAM?

- How do we track abuse that technically involves no real child?

- Can law enforcement keep pace with the rapid evolution of AI tools used for exploitation?

Malaysia’s legal response: Strong laws, weak enforcement

Malaysia boasts one of the strongest legal frameworks in Asia for tackling both real and AI-generated CSAM:

- The Sexual Offences Against Children Act 2017 (SOAC) criminalises visual, audio, and written representations of minors in explicit content – meaning AI-generated CSAM is already illegal under Malaysian law.

- The Penal Code (Section 292) prohibits the distribution of obscene materials, including digitally manipulated images depicting child exploitation.

- The Communications and Multimedia Act 1998 empowers authorities to act against digital platforms involved in circulating synthetic CSAM.

- The Online Safety Act 2024 introduces a robust framework designed to shield minors from the harms of emerging technologies, including deepfake content, by mandating a licensing regime for digital platforms and enforcing strict content moderation standards.

While many countries are still debating how to regulate AI-generated abuse, Malaysia’s laws already cover synthetic CSAM. However, enforcement remains a major challenge; offenders operate anonymously using encryption, VPNs, and cryptocurrencies, making detection and prosecution difficult.

The digital hunt for AI-generated abuse

Even with robust laws in place, tracking and prosecuting synthetic CSAM remains extremely challenging. Law enforcement faces three key hurdles:

1. Encryption and the dark web: Offenders hide behind anonymity networks such as Tor and use privacy-focused cryptocurrencies such as Monero, making transactions nearly impossible to trace.

2. AI outpacing detection tools: Traditional systems such as Microsoft’s PhotoDNA work by matching hashes of known CSAM images, but newly generated AI content has no entry in those hash databases, so it passes through undetected.

3. Under-resourced cybercrime units: Investigating AI-generated CSAM requires advanced expertise, AI-driven detection tools, and global cooperation – resources that many law enforcement agencies currently lack.
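
The second hurdle can be illustrated with a small sketch. PhotoDNA’s actual algorithm is proprietary, so the code below uses a generic “average hash” instead; the function names, the tiny pixel grids, and the distance threshold are all hypothetical and chosen purely for illustration. It shows why matching against a database of known material catches a lightly altered copy of a catalogued image but not a newly generated one.

```python
from typing import Iterable, List

# Hypothetical stand-in for an image: rows of 8-bit grayscale pixel values.
Grid = List[List[int]]


def average_hash(pixels: Grid) -> int:
    """Build a bit pattern: 1 where a pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def matches_known(pixels: Grid, known_hashes: Iterable[int],
                  max_distance: int = 2) -> bool:
    """True if the image's hash is close to any hash already in the database."""
    h = average_hash(pixels)
    return any(hamming(h, k) <= max_distance for k in known_hashes)


# Illustrative data only: abstract 4x4 brightness patterns.
catalogued = [[10, 200, 10, 200],
              [200, 10, 200, 10],
              [10, 200, 10, 200],
              [200, 10, 200, 10]]
# A lightly altered copy (every pixel slightly brighter).
altered_copy = [[p + 5 for p in row] for row in catalogued]
# A different pattern that was never catalogued.
novel = [[200, 200, 10, 10],
         [200, 200, 10, 10],
         [10, 10, 200, 200],
         [10, 10, 200, 200]]

known_hashes = {average_hash(catalogued)}

print(matches_known(altered_copy, known_hashes))  # True: near-duplicate is caught
print(matches_known(novel, known_hashes))         # False: nothing to match against
```

The design point is that hash matching recognises what has already been catalogued. Content that has never been seen before needs a different approach, such as a classifier trained to spot synthetic material.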

Without improved AI-powered detection tools and stronger international enforcement mechanisms, Malaysia risks becoming a hotspot for synthetic CSAM circulation.

Bridging the enforcement gap

To effectively combat the growing threat of AI-generated CSAM, Malaysia must focus on strengthening enforcement while leveraging cutting-edge technology. Key measures include:

- Developing AI-powered detection systems: Law enforcement agencies need to invest in machine-learning models trained to identify synthetic manipulations, similar to deepfake detection tools used in combating misinformation.

- Stronger cross-border collaboration: Since AI-generated CSAM is not confined by borders, Malaysia must expand partnerships with organisations such as Interpol, develop ASEAN cybercrime task forces, and collaborate with technology companies to dismantle online CSAM networks.

- Holding tech companies accountable: AI developers and digital platforms must be regulated to prevent misuse. Companies producing deepfake and image-generation tools should implement strict verification and watermarking systems to deter illegal content creation.

- Enhancing digital literacy and public awareness: Parents, educators, and policymakers must be informed about the risks of AI-generated exploitation. Strengthening awareness programmes and digital safety initiatives, such as those promoted by UNICEF, can help protect children from emerging online threats.

- Legal reforms and policy development: Legislation must evolve to ensure that AI-generated CSAM is prosecuted with the same severity as real CSAM, closing any existing loopholes that allow offenders to evade accountability.

Deepfake abuse beyond child exploitation

Recent high-profile cases demonstrate that deepfake abuse extends beyond the realm of child exploitation.

In a recent BBC report, Australian school teacher Hannah Grundy was horrified to discover a website filled with explicit deepfake images of her. These images, created by a trusted friend, were accompanied by violent rape fantasies and threats, turning her private life into a source of public torment.

The case has not only exposed the devastating personal impact of deepfake abuse on adults but has also set a landmark legal precedent in Australia. It underscores that the misuse of AI is a broader societal menace, affecting all age groups and demanding comprehensive regulatory and enforcement measures.

A new era of online child protection

The rise of AI-generated CSAM marks a dangerous turning point in digital child exploitation. With AI tools evolving faster than law enforcement can adapt, offenders are exploiting legal loopholes, digital anonymity, and weak detection systems to produce highly realistic synthetic child abuse material.

While Malaysia’s laws already criminalise synthetic CSAM, stronger enforcement mechanisms, AI-driven detection strategies, and international cooperation are critical to preventing this form of exploitation from spiralling into an unchecked global crisis.

The solution demands urgent collaboration among governments, AI developers, law enforcement, and the tech industry. Without proactive measures, the next generation of AI models will make synthetic CSAM even harder to detect, easier to produce, and more widespread than ever before.