TikTok may have sparked cybersecurity and privacy concerns in the West, but the app has been successful in Southeast Asia, transforming from a mere entertainment platform into a thriving e-commerce hub with a staggering 325 million monthly active users in the region.

Yet the platform's approach to handling harmful content, including disinformation, hate speech and propaganda, has attracted widespread criticism from governments and civil society alike.

TikTok has often come under fire for allowing controversial content to proliferate, especially during election periods.

During Malaysia's 2022 general election, TikTok failed to detect and remove disinformation, propaganda, and political content, resulting in the emergence of alarming trends such as the #13mei hashtag.

The hashtag refers to the 13 May 1969 racial riots between Chinese and Malay communities, and it was used to share racist content calling for street protests if Chinese-dominated parties such as the Democratic Action Party won the election.

In response, Malaysian authorities summoned TikTok and demanded the swift removal of such content. Although TikTok removed thousands of contentious posts, many remained on the platform.

A study conducted during Malaysia's 2022 general election tracked 2,789 election-related videos, identified using selected keywords, from November to December 2022.

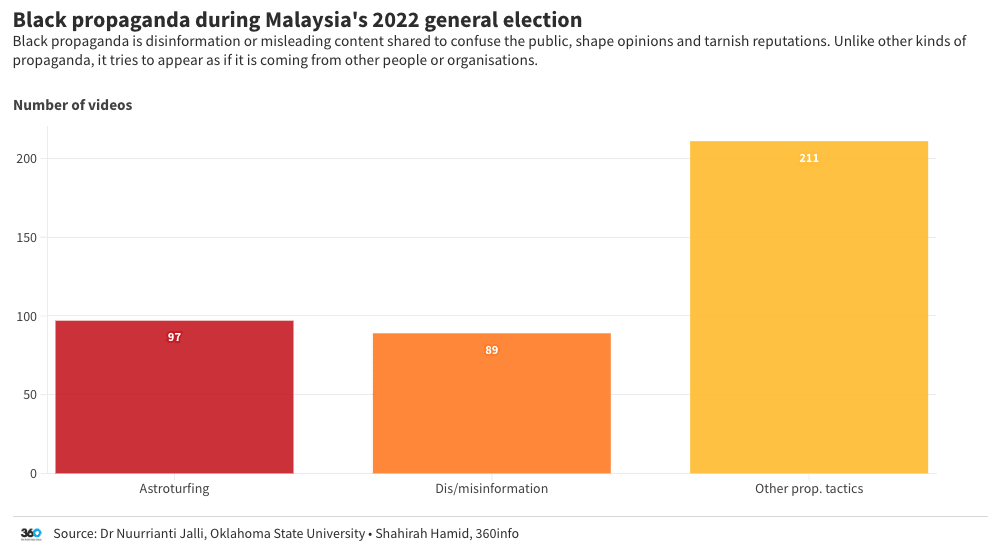

It found that nearly 400 videos contained black propaganda, defined as disinformation or misleading content shared with the intention of confusing the public, shaping opinions and tarnishing reputations.

Of these, 97 were identified as “astroturfing videos”, a form of propaganda where participants disguise themselves as regular citizens acting independently. Despite TikTok's policies, some of these videos were still on the platform as of June 2023.

TikTok's perceived failure to remove harmful content effectively during Malaysia's 2022 general election, despite its explicit policies, has raised concerns, particularly ahead of upcoming elections elsewhere in Southeast Asia, such as Indonesia's in 2024.

Preliminary research into TikTok's role in Indonesia's election has already found propaganda videos on the platform ahead of the vote, including disinformation inciting ethno-religious hatred.

The research also found that loyalists and "buzzers" (also known as cyber troopers) use propaganda techniques, including priming, to circulate hate-filled content in local languages on the platform.

The spread of politically biased content in local languages highlights the platform's limited ability to identify such material promptly.

Another study found pervasive Russian propaganda on Indonesian TikTok, including material that blatantly violates TikTok's guidelines.

Many news-style TikTok videos reused material circulated by pro-Russian propaganda sources. These videos were uploaded by independent accounts with no affiliation to established media organisations.

Because these accounts look like news outlets, their audiences can struggle to judge the reliability of the information they present, and may come to believe misinformation.

Combating disinformation on TikTok is especially difficult because the platform is video-centric.

Unlike fact-checking text-based posts, fact-checking on platforms such as TikTok requires evaluating multiple elements, including text, sound, images and video. Fact-checkers must understand how these elements interact and identify any misleading connections between them.

Developing a machine-learning fact-checking system for TikTok involves transforming each media file into text and analysing it for potentially deceptive narratives. This is necessary because individual components may each appear legitimate while their combination produces misinformation or disinformation, for example when authentic footage is paired with a misleading caption or voiceover.

TikTok claims to partner with global fact-checking organisations, but not all of these organisations have the capacity to process content quickly.

For example, during the 2019 Indonesian election, one candidate orchestrated a disinformation campaign that overwhelmed fact-checking organisations.

In addition, TikTok's systems may struggle to identify localised or politically charged disinformation and propaganda in local languages promptly.

This was evident during the protests that followed Myanmar's 2021 military coup, the 2022 Philippine election and, most recently, Malaysia's 2022 general election.

Given the inadequacy of existing measures, some governments in the region may consider banning TikTok if it is deemed to cause significant harm and fails to moderate harmful content adequately.

However, such a move could also infringe upon freedom of speech and expression.

The problem can be fixed

It’s crucial to acknowledge that engagement is the driving force behind social media platforms, and controversial or provocative content tends to attract more attention and generate more engagement.

With this in mind, TikTok and other platforms could establish a system of rewards and penalties for their users.

Currently, TikTok's algorithm "penalises" creators who don’t post regularly, giving them reduced visibility and fewer chances of appearing on the "For You Page" (FYP).

However, this could inadvertently incentivise content creators to prioritise quantity over quality, potentially amplifying the spread of misinformation.

Alternatively, TikTok could encourage the creation of high-quality, fact-checked educational content by rewarding creators with incentives such as financial bonuses or increased visibility on the FYP.

Additionally, TikTok could establish a reward system to encourage users to report instances of misinformation.

Users could earn points whenever TikTok's moderation team confirms that content they reported is misinformation. These points could then be spent with approved merchants in TikTok's e-commerce ecosystem, offering a tangible reward for users’ proactive moderation efforts.

In addition to its ongoing efforts, TikTok could enhance transparency in its moderation strategies, policy updates, and progress. This could involve publishing detailed transparency reports and actively engaging in dialogue with users and stakeholders.

TikTok could also strive to strengthen partnerships with local NGOs, particularly those with expertise in local socio-political dynamics and languages.

All users, including academics, NGOs, government agencies and media practitioners, can contribute significantly to improving TikTok. They can use the platform as a primary outlet for countering propaganda and disseminating fact-checked information.

Given TikTok's extensive reach, it has the potential to be a powerful platform for promoting informative and positive counter-narratives. However, this requires careful and strategic planning from content creators, NGOs, and governments.

Journalists and media organisations also have a pivotal role to play. They can leverage the platform to disseminate accurate information, debunk misleading narratives, and hold TikTok accountable by scrutinising and reporting on its policies and their enforcement.

This article was co-authored with Nuurrianti Jalli, an assistant professor at the School of Media and Strategic Communications at Oklahoma State University. It was originally published under Creative Commons by 360info™.