OpenAI has just released GPT-4, the latest iteration of the AI model behind its powerful text generator, ChatGPT. The tool is capable of generating convincingly human-like responses to almost any question users put to it.

It can write limericks, tell jokes, and plot a novel. And it can draft a convincing response to almost any question a high school teacher or university lecturer might ask students to write about.

Previous iterations of OpenAI’s GPT language models would often generate text riddled with strange and obvious mistakes. ChatGPT (and particularly GPT-4) is less prone to these problems. ChatGPT is reportedly capable of producing responses that pass exams across many disciplines.

It’s tempting to think we’ll always be able to distinguish between the work of AI and the work of humans, particularly when it comes to distinctly “human” tasks such as creative writing, careful reasoning, and drawing novel connections between different kinds of information.

Unfortunately, this optimism is misguided. AI-generated prose and poetry can be beautiful. And with some clever prompting, AI tools can generate passable argumentative essays in philosophy and bioethics.

This raises a serious worry for universities: that students will be able to pass assessments without writing a single word themselves – or necessarily understanding the material they’re supposed to be tested on.

This isn’t just a worry about the future; students have already begun submitting AI-generated work.

Cheating bans hard to enforce

Some institutions treat the use of AI text-generators as “cheating”. For example, public school systems in NSW and Victoria have banned ChatGPT.

But such bans will be hard to enforce. Compared to traditional forms of plagiarism (where plagiarised work can be compared directly with the source material), student use of AI-generated text is hard to detect – and harder still to prove, in part because ChatGPT generates a new response each time a user inputs the same prompt.

One might hope the problem is just temporary. For its part, OpenAI (alongside other developers) is developing tools to detect AI-assisted “cheating” – though such tools are prone to making mistakes, and can at present be circumvented by asking ChatGPT to write in a style that its own detector is unlikely to catch.

OpenAI is also developing a technique for “watermarking” AI-generated text. This works by influencing which words the AI chooses, leaving subtle quirks in the writing that can be detected further down the track.

But this strategy also has serious limitations. Lightly editing AI-generated text would presumably overwrite these watermarks. And students could seek out alternative AI tools that don’t watermark AI text.

The educational challenges of AI text generation are here to stay.

Adapting won’t be an easy process

Generative AI tools such as ChatGPT are poised to make far-reaching changes to how we approach writing tasks.

These tools are exciting. Among other things, they’ll make some tedious and difficult parts of the writing process easier (including for students for whom English is a second language).

But adapting to a world where AI can write compelling student essays will not be straightforward.

Sam Altman, the CEO of OpenAI, has compared the release of ChatGPT to the advent of the calculator. Calculators brought about enormous benefits; ChatGPT will, Altman claims, do the same.

On this view, schools adapted to calculators by changing how maths is tested and taught; we now need to do the same for ChatGPT.

This analogy seems more or less right, but its implications are less reassuring than Altman intends. The task of adapting to calculators has been long and arduous, and it remains unclear how best to incorporate them into the classroom.

Mathematicians continue to raise plausible worries that overuse of calculators impedes the development of important forms of “mathematical thinking”.

Rather than comforting us, the parallel with calculators should alert us to the magnitude of the task we face.

The two main threats

We see two main threats posed by tools like ChatGPT.

The first is that they’ll produce content that’s superficially plausible but entirely incorrect.

AI outputs can thus leave us with a deeply mistaken picture of the world.

Contrary to appearances, ChatGPT is not “trying” (but, often, failing) to assert facts about the world. Instead, it is (successfully) performing a different task – that of generating superficially plausible or convincing responses to a prompt.

In humans, we would describe this kind of behaviour as “bullshitting”. The term also aptly describes many AI outputs.


The second worry is that reliance on these tools will result in the erosion of important skills. Essay writing, for example, is valuable in part because the act of writing can help us think through difficult concepts and generate new ideas.

Good arguments often emerge iteratively over several drafts, and the final version may look quite different from what the writer envisaged in their original plan.

Writing, so understood, is not just an act of translating our thoughts onto the page; for many writers, it’s part of the reasoning process. If students come to rely on AI text generators to do their writing, this is a skill they might lose.

Addressing the challenges

How, then, should we meet these challenges? In these early stages of the introduction of generative AI, educators may feel overwhelmed by the rapidly changing technological environment, and students are navigating it alongside us.

Being transparent about decisions we make regarding when and how AI tools are used in assessment could help ease anxiety on both sides.

We suggest four approaches.

Teach students how (not) to use AI text generators

ChatGPT can be a useful tool. It can, for instance, help generate ideas and get words on the page.

The worries about misinformation are serious. But these are best addressed by teaching students how to use these tools, how to understand their limitations, and how to fact-check their output.

Fortunately, the core skills cultivated by a good education provide a strong foundation for this project. Teaching students to read critically, to evaluate and corroborate evidence, and to distinguish good arguments from bad is something universities should already be doing.

One approach might be to develop specific assessment tasks where students generate, analyse, and criticise AI outputs.

While such tasks might have some role to play, we would caution against placing generative AI at the centre of education. There’s no shortage of bad arguments being put forward by human beings. We should teach students to recognise bullshit wherever it appears.

Continue efforts to design ‘authentic’ assessments

We should remind ourselves that, for most students, the choice to enter higher education stems from a genuine interest in a subject.

This may go some way towards reducing the temptation to outsource their studies to AI, particularly when the value of completing the work is clear to them.

By designing assessments that are relevant to students’ future careers, and clarifying the purpose of tasks in relation to their own development, we can encourage learners to engage with assessment in the way we intended.

Assessment that draws on students’ interests could keep learners engaged enough that they see little value in outsourcing the pursuit of their own knowledge to AI.

This is a desirable way of designing assessment, both as a response to ChatGPT and as a general goal for higher education.

But while this strategy may undercut some students’ desire to turn to AI, it’s unlikely to fully dissolve the temptation to save time by having an AI write your essay – particularly where doing so would not lower (and might even raise) your final grade.

Additional strategies are needed.

Balance essays with other types of assessment

A key worry about AI text generation is that students may not actually understand the material as well as the work they’ve submitted suggests.

This concern can be met by balancing written work with other kinds of assessments.

In particular, in-person oral presentations cannot be taken over by any algorithm, and so may be an ideal option (provided, of course, that any increase in workload for teaching staff is supported by the institution).

Supplementing traditional essays with other assessments need not come at the expense of good assessment design.

To the contrary, there are good educational reasons to vary written work with these other kinds of assessment; oral communication skills are enormously valuable across a range of professions.

At the same time, writing skills are poised to become less important as ChatGPT and tools like it start to play a major role in how text is produced, both within and outside the classroom.

Develop AI-resistant written assessments

Another strategy involves designing assignments where students are either required to demonstrate their own understanding (independently of written work they’ve submitted), or where AI-generated bullshit can be easily detected.

Some Australian universities are considering reviving pen-and-paper exams.

This strategy may have a role to play, but it would come at a cost. We’re in the midst of a shift away from pen-and-paper examinations to “authentic” assessments – that is, assessments that evaluate skills students will employ in real-world settings.

Few workplaces require their employees to write detailed discussions of difficult questions by hand, in isolation, and without the ubiquitous modern conveniences of an internet connection and a word processor.


An alternative is to combine written essays with the presentation and discussion of this work during class time, potentially modelled on the format of a viva or thesis defence (albeit gentler and shorter, depending on the cohort being taught).

The viva format requires students to understand, and be able to communicate, the ideas they’ve defended in their essay – regardless of whether they outsourced any of the writing to an algorithm.

Finally, there’s some scope to develop essay questions that ChatGPT is unlikely to perform well on.

In our own experiments, we found ChatGPT can generate convincing responses about major works in our respective disciplines.

However, it fares very poorly when asked about the cutting edge of scholarly debate, since its training data contains much less (if any) discussion of recent research.

When asked to reference its claims, it’s prone to “hallucinating” sources that don’t exist.

Assignments that ask for deep research on recent scholarly developments are, for now, relatively AI-resistant. But this strategy will need to be continuously reviewed as AI language models continue to improve.

Picking the right tool for the job

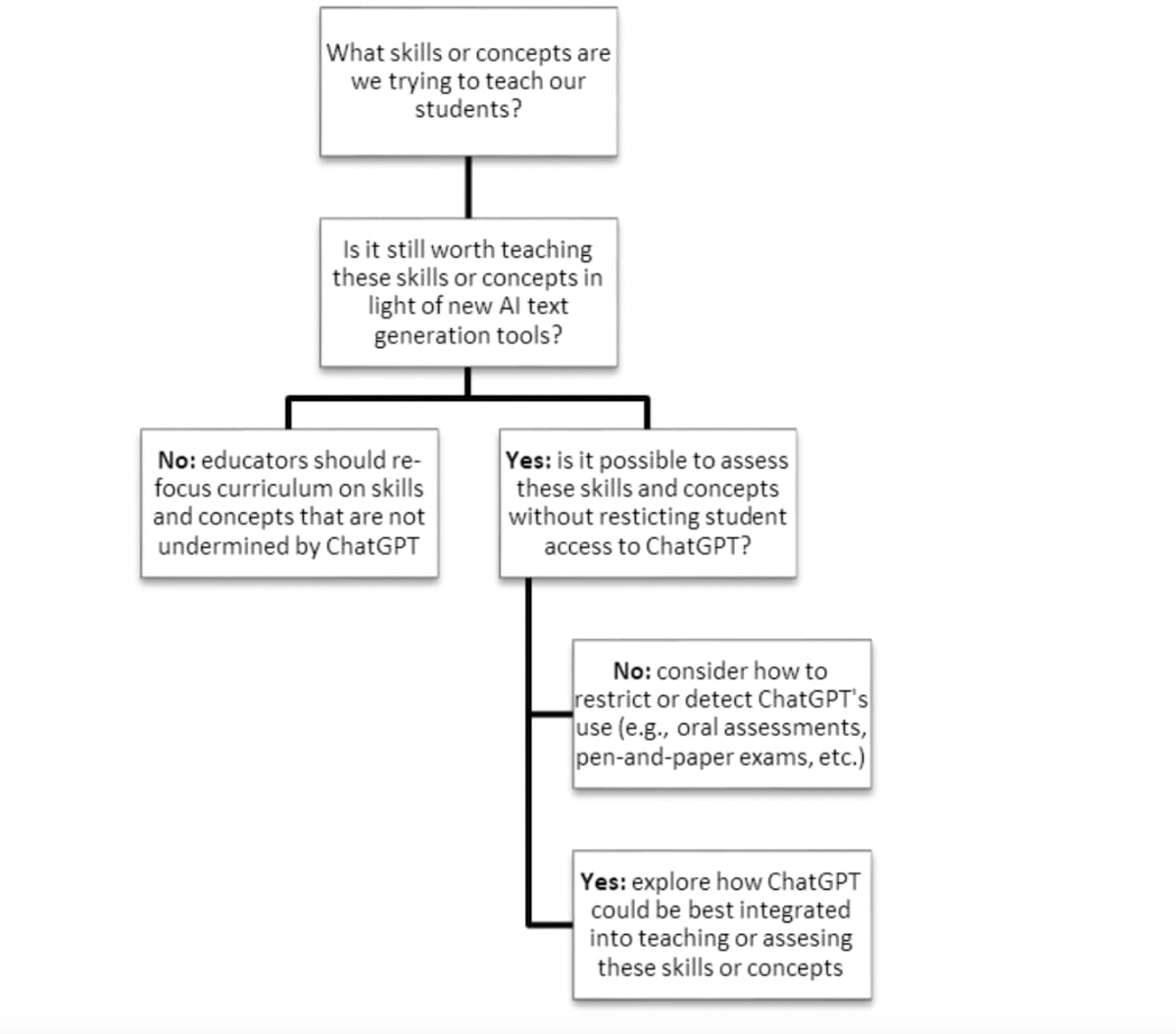

Assessment regimes should be tied to the educational goals we’re trying to achieve, not a knee-jerk reaction to new technologies.

We recommend that educators considering how to adapt their assessment regime in light of ChatGPT ask themselves the following questions:

These suggestions differ from some existing responses, which centre on detecting or preventing the use of AI tools.

This is for a good reason. Our students will enter a world where the use of such tools will likely be commonplace. Indeed, ChatGPT has already been listed as an author on recent academic papers, and utilised by journalists and authors.

We need to prepare students for a world where tools like ChatGPT are readily available – and to teach them how to use these tools well.


In the longer term, progress in generative AI seems likely to pose even more profound challenges to educators.

In the not-too-distant future, ChatGPT and tools like it may become better than most humans at writing in many genres and on many topics.

It’s far from clear whether students will bother to learn to write in such circumstances – or whether employers will care whether they do.

Given the connection between learning to write and learning to think, this is a disheartening prospect.

Moreover, it’s almost certain generative AI will become capable of tasks that many educators think of as central to their own profession. ChatGPT can already generate useful feedback on written work.

Non-profit education organisation Khan Academy recently announced it will be launching a GPT-4-powered AI assistant, Khanmigo, which can play the role of a tutor to students and can also assist teachers with tasks such as creating lesson plans.

Dystopian visions in which AI teachers set tasks that students then farm out to AI look all too plausible.

The immediate challenge for educators is to determine what an AI-literate skill set looks like, and how to evaluate whether students have it, especially when many of us are still acquiring these skills ourselves.

If we can get this right, perhaps we’ll be able to continue to teach and assess the core skills of research, reflection, argument, and critical thinking that have traditionally sat at the heart of the university.

The deeper challenge posed by the “threat” of AI is to imagine what education would look like should the tools available to us relieve us of the need to exercise these crucial skills.