A chat with Dr Elahe Abdi, a Monash University robotics specialist, is a glimpse into the future. The problem is, the future keeps getting closer and closer, to the point where we might now think: Is this, in fact, the future?

In collaboration with Associate Professor Dewi Tojib from Monash Business School, Dr Abdi has led a team of researchers looking into service robots, and how humans react to them.

It turns out that if these robots, built to help humans with simple tasks, are too similar to humans, people get scared and won’t use them. But as time passes, people are becoming less sceptical, which means the imagined future is actually happening in real time.

Lens spoke to Dr Abdi, from the Department of Mechanical and Aerospace Engineering, about the paper, now published in Nature’s Scientific Reports.

First up, where might a service robot be put to work?

One very good example is in medical settings in hospitals. One application we’re keen to explore further involves patients in hospital after a heart attack or a stroke, who usually need information about changes they can make in their lives to prevent a recurrence.

It’s the responsibility of the medical staff such as nurses or the doctors in the hospital to provide this type of information, but because of the workload that they have, the advice can be variable. These types of robots can be placed in hospitals to interact with patients, and provide them with the information they require.

In that type of setting, you want the robots to be persuasive, and you want the patient or the user to follow the advice. So as researchers, it's important for us to know what type of interaction will be most effective for the patient.

Where else?

Education, especially with younger students. It can sometimes be challenging for teachers to keep them engaged, and adding a robot to the classroom can bring an extra element of engagement and make lessons more interesting and appealing to the students.

Also in aged care and industrial settings, and also tourism and hospitality.

Do some hotels already use them?

The most successful examples are in tourism settings like hotels. There are a couple of hotels in Japan that actually use service robots for greeting. They show guests to their rooms, things like that.

Another example is in restaurants – robots that can bring your order to your table, or even cook your food. In Melbourne’s CBD there was an ice cream store (now shut) making the ice creams using robots, and then serving the ice cream using robots.

But what we’re talking about here is the application of robots in medical or education settings, which is about the user’s benefit.


Your study found that people in a tourism setting liked service robots with human-like qualities. In other words, anthropomorphism of the robots.

Yes, exactly. The research starts from the fact that we now have different types of robots with different levels of anthropomorphism, and asks how that affects the way customers interact with them. Would it affect how willing they are to follow the robot’s recommendations?

We found that the answer is yes. We also observed that this is because customers perceive a highly anthropomorphised robot as having more of a mind, and this higher perceived mind makes it more persuasive than a less anthropomorphised robot.

What was the level of anthropomorphism that encouraged people to follow recommendations?

The voice capability was important, but we found the robot’s looks seemed to matter more than its voice. It is the combination of voice and looks that determines how anthropomorphic the robot is perceived to be.

But if a service robot is too human, people get scared?

The conclusion we draw here is that anthropomorphism is good, but we also need to be aware of something called the “uncanny valley”, where a robot that looks too realistic starts to seem creepy, and perhaps even scary, to users.

So currently, what we see in the state of the art is that you want robots that look human-like enough, but you want the user to still be able to tell it’s a robot. If users cannot tell whether or not it’s a robot, and the line between human and robot becomes blurry, that usually has a repelling effect.

This is a very complicated area, because there isn’t a simple “yes” or “no”. There is a grey area, and it also very much depends on the context: who is interacting with the robot, which country you are in, and the cultural and religious background of the users.

To answer this question alone, we need to work with psychologists, people from marketing and business, and those that are specialists in cultural and religious knowledge, and science and philosophy as well.

How did the humans interact with the service robots for your study?

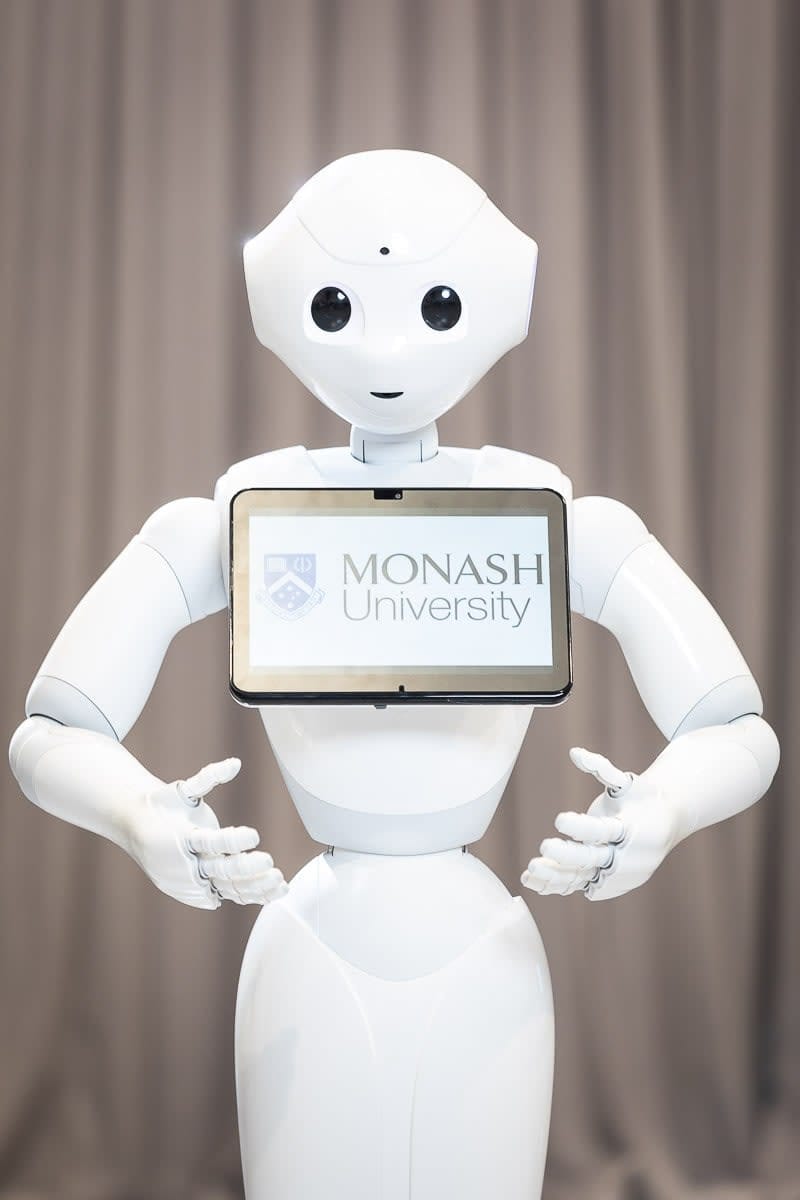

We designed this experiment before COVID. It was going to be in-person, but we switched to online. It’s set in a hotel lobby, and we have Pepper the robot here in our lab, so we used a 3D model of Pepper, and of the participant, using the Unity game engine.

We give control of the mouse to the participant, who is interacting over Zoom. They can walk around this lobby environment, go up to the robots and stand in front of them with a first-person view, and converse with the robot.

The robot starts the conversation by greeting them: “Hello, welcome, can I help you today?” Then it’s the user’s turn. The user doesn’t speak; instead, they see a few options they can choose from.

So, for example, the user could choose, “OK, I am here for one day today and I want to know what are the attractive places that I can visit.”

What were you looking for in particular?

We were hoping to see the effect of two things – the anthropomorphism level and the language style. And we could see the effect of anthropomorphism, but we couldn't see the effect of the language style.

By that I mean that we had two types of conversations from the robot. One was objective, or informative. The robot basically would say, “Go to this place because it has high ratings.” The other was subjective, or more emotive: “Go to this place because I have been there and I have enjoyed it.”

We were curious to see if there’s any difference between these two. From a user’s point of view, I would have expected the robot to deliver factual rather than emotive information, because the robot doesn’t have any emotions.

But we didn’t see this in the study. This may be because users have different perceptions: maybe some users don’t find it very strange that the robot is showing some emotion, or maybe they’ve been interacting with things such as Siri or the like.

Maybe it’s become common enough for a robot to speak with some level of emotion. We didn’t see any difference in how users responded to informative versus emotive language from the robot.

What does that tell you?

It tells me that people are getting used to robots, and they’re becoming receptive to a certain level of emotion displayed by a robot.

Maybe 10 years ago, it was very strange for people to see a machine with some emotion or subjectivity. But now it seems that it's becoming a norm, and people don’t become alarmed as much as before.

It might also suggest that, 10 years from now, the “uncanny valley” I talked about could shift.